Construction

Contents

- 1 Introduction

- 2 Goals and Guiding Principles

- 3 Context Diagram

- 4 Preparation for Construction

- 5 Developing the Physical Design

- 6 Planning and Managing the Development

- 7 Developing the Application

- 8 Testing, Verifying, and Validating the Solution

- 9 Handing off the Solution

- 10 Summary

- 11 Key Competence Frameworks

- 12 Key Roles

- 13 Standards

- 14 References

1 Introduction

Once the requirements for a solution have been agreed upon and analyzed, the development team can start constructing the solution based upon the conceptual and logical designs. This phase can be short and relatively simple, or it can be relatively long and complex. Best practices include constructing, testing, and integrating the pieces as they emerge. In any case, it requires strong technical skills as well as software and service project management skills.

All solution construction needs to go through a Plan > Do > Study (Test) > Act (Adjust) cycle to ensure continuous improvement and maturity of the solution and the processes associated with it. When this cycle happens continuously, it leads to faster development cycles and better outcomes. [14] Most agile development methodologies embody this concept at their core. Throughout the construction phase, the team needs to remember that the goal of the project is to integrate the solution into the users’ production environment as seamlessly as possible. Here, integration means integration with pre-existing software, hardware, databases, and personnel workflows. Integration into the destination production environment always needs to be at the forefront of the developers’ minds.

It is also critical for the development team to build to the enterprise’s architectural standards (Chapter 1) and best practices. If all goes according to plan, the solution will be handed off to the transition team, who will execute the integration of the solution into the existing operations environment. Today, DevOps approaches provide a good model for transitioning

2 Goals and Guiding Principles

The construction phase is typically the hardest phase of the solution lifecycle. This is due to several things. First, the construction team must find and resolve all previously unsurfaced issues with the requirements and logical design of the solution. Often these issues arise while coding or hardware configuration is in progress. Second, the construction team must keep their eye firmly on all the critical EIT quality attributes while they are constructing, such as interoperability, security, quality, disaster preparedness, and supportability. Quality attributes are requirements and should be prioritized accordingly, with parameters provided as release requirements for testing.

The goals of the solution construction phase are actually quite daunting:

- Construct a solution that satisfies all functional and non-functional requirements.

- Construct a solution that is accepted by target users and management.

- Construct a solution that is sufficiently well tested and bug free.

- Construct a solution that EIT Operations can easily support.

- Construct a solution that interoperates with and integrates well into existing computational, operational, and physical environments.

- Ensure that new EIT services can be integrated into business operations.

- Ensure that the solution is well documented for users (including end users and Operations staff).

- Leave enough documentation about the solution’s construction to enable successors to fix, modify, and enhance the solution and stay within the intent of the logical and technical design.

There are several important principles that serve every development team during the construction phase:

- Design is not coding; coding is not design. Both are important, but you need to do the design, not just sit down and code. The design process should be thorough enough to avoid the need for re-engineering and recoding. A thorough design pays for itself. This principle is true whether you are using a waterfall, prototyping, iterative, spiral, rapid, extreme, or agile development methodology. That is why many agile methodologies require upfront architecture design.

- Minimize complexity. The KISS Principle (keep it simple, stupid) was popular in the 1970s, but its idea is still important. Although it applies to many engineering domains, it is especially applicable to software. Simple designs are easier to build, debug, maintain, and change.

- Accommodate change. The only thing that one can count on in life is change. This is also true in developing EIT solutions. It is critical to design the solution to accommodate change, because it will be required.

- Test early and often. A number of studies have shown that the cost of finding and fixing a bug in code increases significantly the longer the bug exists. The cost increases as the code progresses through each stage: in the hands of the developer, in the hands of the testing and debugging team, in transition, and in operations. Only one thing helps: catch bugs as soon as possible.

- Integrate code early and often. Software integration needs to be continuous, not something that happens at the very end. Software integration should be managed via configuration control tools to maintain the integrity of the code base as it evolves.

- Build in quality and security. Assess the design and the solution against the requirements for quality attributes, including interoperability, maintainability, and security, as the solution is being created, not after the fact.

3 Context Diagram

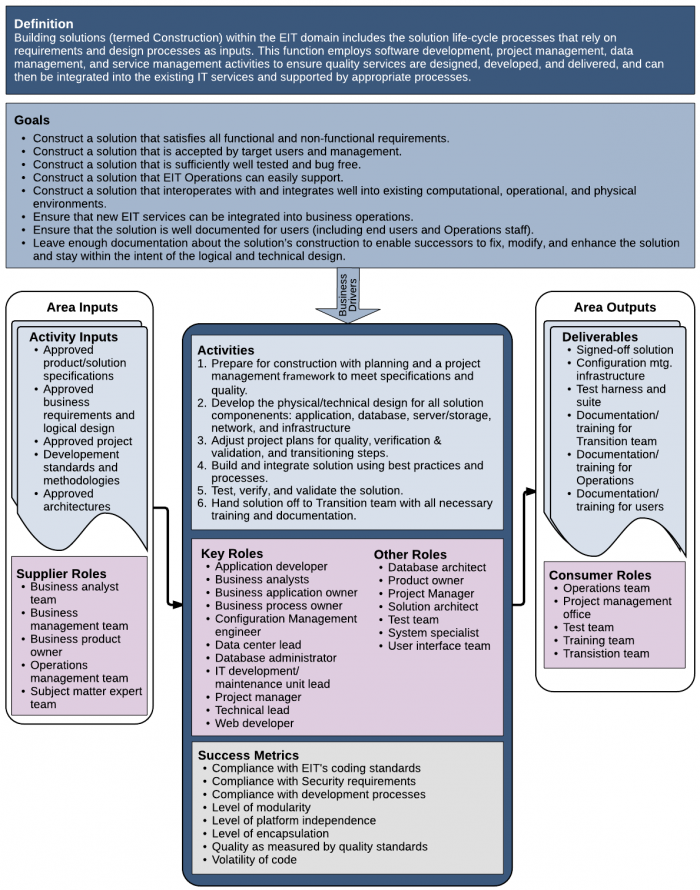

Figure 10.1 Context Diagram Construction

4 Preparation for Construction

One would think that the Requirements Analysis and Logical Design phase (Chapter 9) is the preparation required for construction to proceed. It does (or maybe should) provide all the necessary background information; however, the construction team may not be the team that prepared the requirements and logical design artifacts, so it is critical for the construction team to become familiar with the requirements as developed.

The team needs to prepare for construction to:

- Get the solution right

- Understand completely what each team member needs to accomplish

- Establish important relationships with users and stakeholders up front

- Understand each construction team member’s responsibilities

There are several critical activities that should occur during the preparation phase:

- Collect and review all Requirements outputs/artifacts, including Use Cases

- Understand the relationships among requirements

- Meet with stakeholders and users to confirm understanding

- Select an appropriate development methodology

4.1 Collect and Review Requirements

The goal of the Requirements Analysis and Logical Design activities is to understand and document all the requirements sufficiently for the consumers of the artifacts (developers, testers, user documentation writers, transition team, and Operations team) to be able to do their jobs. The requirements must be sufficient to develop a logical design that the construction team can turn into a physical design. The construction team needs to become intimately familiar with all the artifacts that the requirements team has generated.

Because of their fresh eyes and different (implementation) perspective, the construction team will often find ambiguous requirements that need to be restated. They might find conflicting requirements that need to be resolved. They might also find incomplete or missing requirements (often called “derived requirements”) that need to be added to the list. In the best of all worlds, these issues are found during the preparation phase rather than while programmers are coding or users are testing.

4.2 Review Use Cases

If the requirements team did their job well, there should be a number of use cases. These use cases indicate in words or pictures how the system should behave. It is critical for the construction team to review all use cases in detail. The goal is to have the solution behave like the use cases and to identify where it might not be able to. Use cases are often the best measure of whether the construction team is doing their job right. In addition, use cases might imply the need for particular hardware or software resources to enable construction of the solution. For example, a use case for a mobile app might indicate the need for a particular feature, such as GPS or fingerprint security, which limits the supported devices to those that have the feature built in.

In addition, use cases are often excellent guides for developing test cases for the constructed solution. Note that during the use case review process, the construction team often identifies “holes” in the use cases, that is, situations that were not covered or options that were not explored. The construction team needs to take careful note of the use case issues. They might be required to add use cases and get them verified by the SME or stakeholders; or, the construction or test team might make notes that will result in more test cases.
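As a rough illustration of how a use case can seed test cases, the sketch below models a use case as a main flow plus alternate flows and emits one test-case title per flow and per required device feature. The `UseCase` structure, its field names, and the GPS check-in example are hypothetical and only illustrate the idea; a real project would follow its own use case template.

```python
from dataclasses import dataclass, field


@dataclass
class UseCase:
    """Hypothetical, minimal representation of a use case."""
    name: str
    main_flow: list[str]
    alternate_flows: dict[str, list[str]] = field(default_factory=dict)
    required_features: list[str] = field(default_factory=list)


def derive_test_cases(use_case: UseCase) -> list[str]:
    """Return one test-case title per flow and per required device feature."""
    cases = [f"{use_case.name}: main flow completes"]
    for flow_name in use_case.alternate_flows:
        cases.append(f"{use_case.name}: alternate flow '{flow_name}'")
    for feature in use_case.required_features:
        cases.append(f"{use_case.name}: device without {feature} is handled")
    return cases


# Illustrative use case: a mobile check-in that depends on GPS.
check_in = UseCase(
    name="Field check-in",
    main_flow=["Open app", "Read GPS position", "Submit check-in"],
    alternate_flows={"GPS signal lost": ["Show retry prompt"]},
    required_features=["GPS"],
)

test_cases = derive_test_cases(check_in)
for title in test_cases:
    print(title)
```

A flow that has no corresponding title in the output is a candidate "hole" to raise with the SME or stakeholders.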

4.3 Meet with Stakeholders and Users

Too often, developers want to hide in the back room while constructing the solution. Then, when they think they are done, they reveal the “finished” solution to the awe and applause of the users. Unfortunately, this is not a good tactic, because it rarely results in the awe and applause that the developers are seeking. Surprise! What do you mean this doesn’t work the way the users want it to? Involving the stakeholders and users throughout the construction process is essential. Even though the requirements team did their job and showed them all the designs, there is just something different about seeing the thing work for real, even if it is just a prototype. Users don’t get it until the solution is there in front of them.

Developing a good relationship with representatives of the user community is critical. Starting the process at the beginning of the construction phase often has the best results. If possible, identify a couple of friendly individuals who are not intimidated by technology and who are excited to try out the solution. Get to know their perspective about what the system needs to do and how it needs to work to help them do their job. Understand how they want to be involved in the process. Determine if they are willing to try out prototypes or be beta testers.

Also, find the right people in your user community and stakeholders to figure out how to stage the rollout of the solution. It is true that some solutions can be constructed and rolled out in one wave of the magic wand; however, most solutions are best constructed and tested in stages – each stage resulting in an increment that adds new features. Stakeholders and users often have the best ideas of what should be included in each increment and how many stages make sense. They know what makes a system whole and worth running through an alpha or beta test.

4.4 Select a Development Methodology

A system or software development methodology is the framework that is used to structure, plan, and control the process of developing a solution. Most enterprises adopt one development methodology for EIT projects (and in fact, maybe for all corporate projects), and each project is required to use that methodology. However, if a methodology is not prescribed by the EIT department, the project needs to select one. The most common are mentioned in the section on Development Methodologies.

5 Developing the Physical Design

During the physical design activities, all the conceptual and logical specifications of the system are converted to descriptions of the solution in terms of software, databases, hardware, and networks, as well as the connections between all of the parts that will result in the desired behavior. The physical or technical design specifies not only what is being implemented, but also how it will be implemented, including what programming languages and programming standards to use, the infrastructure to build, critical algorithms to implement, the systems support, and so on.

There are a number of rules of thumb that help keep the physical designers on track:

- The technical designers should minimize the intellectual distance between the software/hardware and the task as it exists in the real world, keeping the connection between the two as simple as possible. That is, the structure of the software design should (whenever possible) mimic the structure of the problem domain. Selecting the appropriate architecture pattern is crucial.

- Good designers consider alternative development approaches, judging each based on the requirements of the problem, the resources available to do the job, and the required performance.

- Good designers avoid reinventing the wheel. Systems are constructed using a set of design patterns, many of which have been encountered before. Selecting existing patterns that work well is always a better alternative to reinvention. Reuse typically minimizes development time and the amount of resources used to complete the project. Also, organizations that manifest a NIH (Not Invented Here) Syndrome often miss out on exceptional solutions that will save time and money.

The technical design needs to be carefully reviewed to minimize conceptual (semantic) errors that can occur translating the logical design into a technical one. Sometimes there is a tendency to focus on minutiae when the technical design is reviewed, missing the forest for the trees. A design team should ensure that major conceptual elements of the design (such as omissions, ambiguity, and inconsistency) have been addressed before worrying about the syntax of the design model.

Note that the technical design for the solution needs to be worked on by a solution or enterprise architect associated with the construction team, even if the solution construction is going to be completely or partly outsourced.

5.1 Application Design

Developing an application these days can mean a number of things, including developing:

- A client-based application, or fat-client

- A server-based application (likely with a thin client)

- A web-based application

- A mobile device application

An application can be interactive and thereby require a user interface, or it can be non-interactive (such as a utility program) and just perform its function in the background. In any case, the application design should include the user interface, important behaviors, algorithmic design, data structure design, access design, plus the interactions between the application and other components of the solution. User experience (UX) design has become increasingly important as tablets and other mobile platforms have become ubiquitous. As a result, users’ expectations of interface behavior and intuitiveness have risen considerably. The interface is only a part of the UX, though. A host of other features, such as overall usability and suitability, play important parts.

One of the foremost experts in this area, Don Norman, explains: “The first requirement for an exemplary user experience is to meet the exact needs of the customer, without fuss or bother. Next comes simplicity and elegance that produce products that are a joy to own, a joy to use. True user experience goes far beyond giving customers what they say they want, or providing checklist features. In order to achieve high-quality user experience in a company's offerings there must be a seamless merging of the services of multiple disciplines, including engineering, marketing, graphical and industrial design, and interface design.” (https://www.nngroup.com/articles/definition-user-experience/)

5.1.1 Software Framework Selection

A software framework is a universal, reusable software development environment that provides functionality that facilitates the development of software solutions. Frameworks often include code compilers, code libraries, programming tools, application programming interfaces (APIs), and code configuration tools among other tools. Why use a framework? Because it leverages the work of many other programmers. Frameworks are often a tremendous source of tested, highly-functional algorithms, data structures, and code infrastructure. Solution construction can be faster, more efficient, and more reliable (and obviate a lot of drudge work).

There are many frameworks available. Some are proprietary, some are FLOSS (Free/Libre and Open Source Software). Usually they are based on a particular programming language (such as Java, C++, C#, PHP, XML, Ruby, and Python) and application environment (client, web, server, or mixed environments). Some of the more popular frameworks include:

- GWT — Google Web Toolkit. Functionality includes extensive interaction with Ajax. You write your front end in Java, and the GWT compiler converts your Java classes to browser-compliant JavaScript and HTML. Also includes interaction with Google Gears for creating applications available offline.

- Spring Java Application Framework — This framework can run on any J2EE server. It has a multilayered architecture with an extensive API.

- Apache Cocoon — Specifically supports component-based development with a strong emphasis on working with XML and then translating to other portable formats.

- JavaServer Faces (JSF) — Helps build user interfaces for Java server applications. It helps developers construct UIs, connect UI components to an application data source, and wire client-generated events to server-side event handlers.

- Symfony — Used to build robust applications for an enterprise environment. Everything can be customized. Lots of help for testing, debugging, and documenting the solution.

- Django — A Python framework that has a template system and dynamic database access API that is used often for content retrieval.

5.2 Database Design

The database design determines how data will be organized, stored, and retrieved. Potentially, the database design determines the type of database (e.g., hierarchical, relational, object-oriented, network) that needs to be constructed and the database technology that will be used (e.g., Oracle, Sybase, SQL Server). The design also specifies who has access to the data, when they have access, and whether or not the database needs to be distributed.

There are two situations to consider:

- The new solution database stands alone

- The new solution database integrates with already operational databases

The first situation is the less complicated of the two. When the new solution database stands alone, the design team can determine the type, technology, access rules, and behaviors without much consideration of what else happens in operations. But, of course, there will likely be EIT Department standards, technologies, and methodologies that need to be followed. When the new solution data needs to be integrated with an already operational database (and, given the ever-growing demand for interoperability, this is almost always the case), the database design and the transition plan usually become much more complicated. The construction team needs to consider:

- How much of the data that the new solution needs already exists in the database?

- Is the operational database in the right form and using the correct data types for the new solution?

- Does the database need to be restructured due to different types of database queries or reports that need to be written?

- Is it necessary to change any technologies to accommodate the functionality or the hosting of the new solution?

Planning a database integration can be extremely difficult, and it usually requires planning and writing an ETL (Extract, Transform and Load) conversion program that will move the data from one structure/technology to another. It might also then require modifications to existing applications that use the already existing databases.
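To make the ETL idea concrete, here is a minimal sketch that uses Python's built-in `sqlite3` module with two in-memory databases. The table names (`cust`, `customer`), the columns, and the sample rows are invented for illustration; a real conversion program would also need validation, error handling, and reconciliation reports.

```python
import sqlite3

# Hypothetical legacy and target schemas, both held in memory for the sketch.
legacy = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")

legacy.execute("CREATE TABLE cust (id INTEGER, full_name TEXT, joined TEXT)")
legacy.executemany(
    "INSERT INTO cust VALUES (?, ?, ?)",
    [(1, "Ada Lovelace", "12/10/2015"), (2, "Alan Turing", "23/06/2016")],
)
target.execute(
    "CREATE TABLE customer (id INTEGER PRIMARY KEY, first_name TEXT, "
    "last_name TEXT, joined_on TEXT)"
)


def extract(conn):
    """Extract: read every row from the legacy table."""
    return conn.execute("SELECT id, full_name, joined FROM cust").fetchall()


def transform(row):
    """Transform: split the name and convert DD/MM/YYYY to ISO 8601."""
    cust_id, full_name, joined = row
    first, _, last = full_name.partition(" ")
    day, month, year = joined.split("/")
    return cust_id, first, last, f"{year}-{month}-{day}"


def load(conn, rows):
    """Load: insert the transformed rows in a single transaction."""
    with conn:
        conn.executemany("INSERT INTO customer VALUES (?, ?, ?, ?)", rows)


load(target, [transform(r) for r in extract(legacy)])
migrated = target.execute("SELECT * FROM customer ORDER BY id").fetchall()
print(migrated)
```

Keeping the three steps as separate functions makes it easier to test the transformation rules on their own before any production data is touched.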

Note: It is often the case that the database design fluctuates while the solution code is being written. It is difficult to completely understand how the data needs to be accessed and stored until much of the code is written. Sometimes changes in an algorithm can require a structural change in the database schema or even a technology change. Another consideration is determining where certain operations will be executed, such as via stored procedures in the database or in application code. Depending on the choice, there can be significant differences in performance, for example.

5.3 Server and Storage Design

Server design determines what functionality will be performed on general servers rather than on clients or mobile devices. There is often a good deal of discussion by the team to determine where particular functions will take place. There are many trade-offs. Performing functions on the client can enhance performance and security. Performing functions on a server allows for access to larger datasets and files, but may lead to latency issues. Today, there is rarely a single server anywhere. In most instances, enterprises create or lease server farms to handle the computational load. As the demand for servers increases, so does the complexity of the server farm design. In the end, the server design team needs to determine:

- Location: Where should the servers be located? Do they need to be distributed among multiple locations?

- Redundancy: Are the servers so critical that there needs to be significant redundancy, such as multiple server farms? Or would fail-over and hot swap capabilities be sufficient?

- Computational Needs: How much computation needs to be performed on the servers? As a result, what kind of peak computational loads are expected? How does that affect the need for resources? What kind of load-balancing is needed?

- Performance: What kind of response times are required and how does that affect the number of servers needed?

- Security: What kind of security do we need for our servers?

- Reuse: Can we use any of our current servers or server farms for this purpose? If so, how will this new solution affect their performance?
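The computational-needs and performance questions above often come down to a first-cut capacity estimate. The sketch below is one simple way to get a starting number. The function name, the 70% target utilization, the single spare server, and the sample load figures are all assumptions chosen for illustration, not recommended values.

```python
import math


def servers_needed(peak_requests_per_sec: float,
                   per_server_capacity: float,
                   target_utilization: float = 0.7,
                   spare_servers: int = 1) -> int:
    """Estimate servers for a peak load, leaving headroom and spares.

    All inputs are planning assumptions, not measurements.
    """
    if per_server_capacity <= 0 or not 0 < target_utilization <= 1:
        raise ValueError("capacity must be positive and utilization in (0, 1]")
    # Only a fraction of each server's capacity is planned for use.
    usable_capacity = per_server_capacity * target_utilization
    return math.ceil(peak_requests_per_sec / usable_capacity) + spare_servers


# Hypothetical peak of 1,200 requests/s on servers rated at 250 requests/s.
estimate = servers_needed(peak_requests_per_sec=1200, per_server_capacity=250)
print(estimate)
```

An estimate like this is only a starting point; load testing against the real application should replace the assumed per-server capacity as soon as measurements are available.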

Storage design consists of determining the technical requirements for how information will be stored and accessed. The things to consider are:

- Location: Where does the information need to be stored? On the client? On a server? In a public or proprietary cloud?

- Amount: How much information needs to be stored? Is it permanent or transient? And, if transient, how long does it need to be stored? Are there contractual obligations for maintaining data? Would archiving suffice?

- Performance: How fast does the storage and retrieval process need to be?

- Security: How secure must the data be?

- Redundancy: Do we need to provide data storage redundancy? If so, how transparent must the access be?

- Backup: What is the data backup plan? How much do we rely on our data redundancy versus other kinds of backup storage? How often do we back up? How long do we keep the data? What about off-site storage? Are there any escrow requirements?

- Reuse: Can we use any of our current storage systems for this new solution? If so, how will this new solution affect the performance or sizing of the existing systems?
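The amount, redundancy, and backup questions above can be combined into a rough sizing calculation. The following sketch assumes a constant daily data volume, a fixed retention period, and full copies for each replica and backup. The parameter names, defaults, and sample figures are hypothetical.

```python
def storage_estimate_gb(daily_new_data_gb: float,
                        retention_days: int,
                        replicas: int = 2,
                        backup_copies: int = 1,
                        growth_margin: float = 0.25) -> float:
    """Rough capacity: retained data times every copy kept, plus a margin."""
    if retention_days < 0 or replicas < 1 or backup_copies < 0:
        raise ValueError("invalid retention or copy counts")
    retained = daily_new_data_gb * retention_days
    total_copies = replicas + backup_copies
    return retained * total_copies * (1 + growth_margin)


# Hypothetical workload: 4 GB of new data per day, kept for one year.
needed_gb = storage_estimate_gb(daily_new_data_gb=4, retention_days=365)
print(f"{needed_gb:.0f} GB")
```

Compression, deduplication, and incremental backups would all lower the real figure, so this kind of estimate errs on the conservative side.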

As with the database design, it might not be totally clear what the true server and storage needs will be until the code is nearing completion; however, coming up with a conservative guess to start is required to make progress on the storage and server acquisition and implementation.

5.4 Network & Telecommunication Design

Network design needs to ensure that the network can meet the capacity and speed needs of the users and that it can grow to meet future needs. Many consider the designing process to encompass three major steps:

- The network topological design describes the components needed for the network along with their placement and how to connect them together.

- Network synthesis determines the size of the components needed to meet the performance requirements, in this case often known as grade of service (GoS). This stage uses the topology, GoS, and cost of transmission to calculate a routing plan and the size of the components.

- The Network realization stage determines how to meet the capacity needs for the solution and how to ensure the reliability of the network. Using all the information related to costs, reliability, and user needs, this planning process calculates the physical circuit plan.
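As one illustration of the synthesis step, the sketch below sizes a group of circuits for a target grade of service using the Erlang B formula. Erlang B is a classic teletraffic model that is swapped in here as an assumption; the text above does not say which traffic model to use. The offered load of 10 erlangs and the 1% blocking target are made-up inputs.

```python
def erlang_b(offered_erlangs: float, circuits: int) -> float:
    """Blocking probability for `circuits` circuits under Erlang B."""
    blocking = 1.0  # with zero circuits, every call is blocked
    for n in range(1, circuits + 1):
        # Standard recurrence: B(n) = A*B(n-1) / (n + A*B(n-1))
        blocking = (offered_erlangs * blocking) / (n + offered_erlangs * blocking)
    return blocking


def circuits_for_gos(offered_erlangs: float, target_blocking: float) -> int:
    """Smallest circuit count whose blocking meets the target GoS."""
    if offered_erlangs < 0 or not 0 < target_blocking < 1:
        raise ValueError("traffic must be >= 0 and target in (0, 1)")
    circuits = 0
    while erlang_b(offered_erlangs, circuits) > target_blocking:
        circuits += 1
    return circuits


# Hypothetical inputs: 10 erlangs of offered traffic, 1% blocking allowed.
needed = circuits_for_gos(offered_erlangs=10.0, target_blocking=0.01)
print(needed, round(erlang_b(10.0, needed), 4))
```

Erlang B assumes that blocked calls are dropped rather than queued, so it fits circuit-style resources better than packet networks, where other models apply.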

The Network & Telecommunication plans and design not only contain topological diagrams of equipment and wiring, they also must cover:

- Information about equipment delivery and customization and/or fabrication

- Plans to test reliability and quality of network gear and services

- Plans for IP address management for network VLANs and subnets

- Firewall locations and settings

- Methods to monitor and manage network traffic

- Network optimization to enable delivery of services over geographically dispersed areas of an enterprise

- Policies and procedures to keep the entire network and subnets working as designed
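For the IP address management item in the list above, Python's standard `ipaddress` module can draft and sanity-check a subnet plan. The address block `10.20.0.0/16`, the VLAN names, and the one-/24-per-VLAN policy are assumptions made up for this sketch.

```python
import ipaddress

# Hypothetical site block and VLAN names, used only for illustration.
site_block = ipaddress.ip_network("10.20.0.0/16")
vlans = ["servers", "staff", "guest", "voice"]

# Carve one /24 per VLAN out of the site block, in order.
plan = dict(zip(vlans, site_block.subnets(new_prefix=24)))

for name, subnet in plan.items():
    hosts = subnet.num_addresses - 2  # exclude network and broadcast addresses
    print(f"{name:8} {subnet}  usable hosts: {hosts}")

# Sanity check: no two VLAN subnets overlap.
subnets = list(plan.values())
assert not any(
    a.overlaps(b) for i, a in enumerate(subnets) for b in subnets[i + 1:]
)
```

Generating the plan from code rather than maintaining it by hand makes it easy to re-check for overlaps whenever a VLAN is added.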

5.5 EIT Infrastructure Support Design and Planning

EIT Infrastructure comprises those systems, software, and services that are used to support the organization. They are not specific to one project or solution; they are used by most (if not all) projects and solutions. The infrastructure serves as a foundation for both the construction and the operation of solutions. It is rare that the EIT organization creates the infrastructure components; nearly all come from third-party vendors. Still, the EIT organization needs to select, integrate, operate, and maintain the components in the context of its operations. For detailed information about how to work with vendors to acquire components, see the section on Acquisition.

Although there is some disagreement as to the exact list of EIT infrastructure components, the following types of components are typical:

- Application development tools and services — Many items belong in this list, including software development platforms, platform middleware, integration-enabling software, testing harnesses, and configuration management tools. These tools help independently designed and constructed applications work together. They also increase the productivity of the engineering staff.

- Information management software tools and services — The components in this area include data integration tools, data quality tools, and database management systems.

- Storage management software and services — This group includes all software products that help manage and protect devices and data. These tools manage capacity, performance and availability of data stored on all devices including disks, tapes, optical devices, and the networking devices that handle data.

- IT operations management tools and services — This includes all the tools required to manage the provisioning, capacity, performance and availability of the computing, networking, and application environment.

- Security management tools and services — These tools and services test, control, and monitor access to internal and external EIT resources.

- Operating systems and other software services — The base level operating systems that run on all devices need to be selected and maintained. OSs manage a computer’s resources, control the flow of information to and from a main processor, do memory management, display and peripheral device control, networking and file management, and resource allocation between software and system components. Beyond operating systems, other infrastructure software such as clustering and remote control software, directory servers, OS tools, Java servers, mainframe infrastructure, and mobile and wireless infrastructures are usually required.

5.6 Desired Technical Design Qualities

When creating a technical design, the team should do their best to build in the following qualities to all the components, as much as possible:

- Compatibility — The software and hardware are able to operate with the other solutions with which they are designed to interoperate. For example, a piece of software should be backward-compatible with an older version of itself.

- Extensibility — New capabilities can be added to the software without major changes to the underlying architecture.

- Fault-tolerance — The software is resistant to and able to recover from component failure. The design should be structured to degrade gently, even when aberrant data, events, or operating conditions are encountered. Well-designed software should never "bomb." It should be designed to accommodate unusual circumstances, and if it must terminate processing, do so in a graceful manner.

- Integrability — The design should be consistent. A consistent design appears as if one person developed the entire thing; the components need to be totally compatible. An integrable design uses a uniform set of coding styles and conventions. In addition, great care is taken to define the interfaces between design components so that integration does not introduce hundreds of bugs that are hard to track down.

- Maintainability — A measure of how easily bug fixes or functional modifications can be accomplished. High maintainability can be the product of modularity and extensibility.

- Modularity — The resulting software comprises well-defined, independent components, which leads to better maintainability. The components can then be implemented and tested in isolation before being integrated to form the desired software system. This allows division of work in a software development project.

- Reliability — The software is able to perform a required function under stated conditions for a specified period of time.

- Reusability — The software components (units of code) are clean enough in design and function to be included in the software reuse library.

- Robustness — The software is able to operate under stress or tolerate unpredictable or invalid input. For example, it can be designed with a resilience to low memory conditions.

- Security — The software is able to withstand hostile acts and influences. All corporate and personal data is secure.

- Scalability — The solution can be easily expanded, as needed. Documentation about how to add resources to the solution must be available. Hardware and virtualization designs can make this quality available; however, there needs to be a well-trained individual assigned to know when and how to scale the solution.

- Usability — The software user interface must be usable for its target users. Default values for the parameters must be chosen so that they are a good choice for the majority of the users. [5]

- Performance — The software performs its tasks within a user-acceptable time. The solution only consumes a reasonable amount of resources, whether they be power, memory, disk space, network bandwidth, or other.

- Portability — The solution can be moved to different environments, if necessary.

- Scalability — The software adapts well to increasing data or number of users.

- Traceability — Because a single element of the design model often traces to multiple requirements, it is necessary to have a means for tracking how requirements have been satisfied by the design model.

- Uniformity — A system design that resembles a Frankenstein monster is often hard to code, debug, integrate, and maintain. A uniform design helps with all of these factors.

- Well-Documented — The Developer Insights Report, published by Application Developers Alliance, points out that one of the top reasons that development projects fail is changing and poorly documented requirements. This also applies to the technical design phase.
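The fault-tolerance and robustness qualities in the list above can be shown with a small input-handling sketch. Instead of crashing on invalid input, the function logs the problem and degrades to a default. The function name, the `orders` logger, and the default quantity of 1 are hypothetical; whether a silent default is acceptable depends on the requirements of the actual solution.

```python
import logging
from typing import Optional

logging.basicConfig(level=logging.WARNING)
log = logging.getLogger("orders")

DEFAULT_QUANTITY = 1  # hypothetical fallback, chosen for the sketch


def parse_quantity(raw: Optional[str]) -> int:
    """Return a valid quantity, degrading to a default on bad input."""
    try:
        quantity = int(raw.strip())
    except (ValueError, AttributeError):
        # Non-numeric text or a missing value: record it and carry on.
        log.warning("Unreadable quantity %r; using default", raw)
        return DEFAULT_QUANTITY
    if quantity < 1:
        log.warning("Out-of-range quantity %d; using default", quantity)
        return DEFAULT_QUANTITY
    return quantity


results = [parse_quantity(value) for value in ["3", " 12 ", "abc", "-4", None]]
print(results)
```

The warnings leave a trail for Operations staff, which supports the maintainability and supportability qualities as well.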

6 Planning and Managing the Development

In EIT organizations, Planning is a process that continues throughout the life cycle of a solution. The most critical planning often occurs during the construction phase. It is also often the most difficult plan to create. One creates the plan to include the physical/technical design activities, but the planning process continues as the team puts together detailed plans and estimates for the actual construction elements in the schedule, whether they be chunks of calendar time, sprints, feature deliveries, or the like. These plans must include time for developing and executing tests (including allowing the developers sufficient time to test their code), for continuous integration and bug fixing.

Why is it so difficult? Because there are usually sections of the construction that have never been tackled before, and the task durations and personnel needs are just good guesses. For example, algorithms that were designed in the technical design tasks might not pan out when they are constructed and tested, and thereby require additional time to refine and recode. Since not all developers have equal capabilities, log jams may develop along the critical path. People may not be sufficiently skillful at using the selected toolset. People might even get sick and have to take time off!

6.1 Construction Plan Contents

What the construction plan will look like and how it is constructed depend a great deal on the development methodology used by the construction team. If the team uses a waterfall methodology, they will perform a huge amount of the planning up front and will fill out and refine the plans for the detailed implementation tasks when the technical design is complete. If the team is using a rapid prototyping approach, the team will construct a high-level plan up front for the entire project that will be divided into phases. Right before each phase, the team will create a detailed plan for the upcoming phase. (Refer to the Project Management Body of Knowledge (PMBOK) for detailed information about project planning.)

Best practices for creating useful and durable construction plans include:

- Create a detailed plan with reasonable estimates for all resources (human, facilities, HW, & SW), costs, and schedule

- Create tasks/subtasks that are relatively short in duration and well understood.

- Understand and document the interdependencies between tasks and subtasks.

- Specify the models, language, hardware, and software tools that will be used during each task.

- Specify critical measurements with each task, such as time, quality level, tests to pass, and performance measures that need to be met.

- Make time for building in the attributes that you need to have in your solution, such as levels of quality, security, and integrability.
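Documenting task interdependencies makes it possible to compute how long the plan must take at a minimum. The sketch below assumes a hypothetical set of tasks with durations in days and prerequisite lists. It computes each task's earliest finish and, from that, the shortest possible project length (the length of the critical path).

```python
from functools import lru_cache

# Hypothetical tasks: name -> (duration in days, prerequisite task names).
tasks = {
    "design_db": (3, []),
    "build_api": (5, ["design_db"]),
    "build_ui": (4, ["design_db"]),
    "integrate": (2, ["build_api", "build_ui"]),
}


@lru_cache(maxsize=None)
def earliest_finish(task):
    """Earliest day a task can finish once all of its prerequisites are done."""
    duration, prerequisites = tasks[task]
    start = max((earliest_finish(p) for p in prerequisites), default=0)
    return start + duration


project_length = max(earliest_finish(name) for name in tasks)
print(project_length)  # 10
```

Here the path design_db, build_api, integrate determines the schedule, so a slip in build_ui of up to one day would not delay the project, while any slip in build_api would.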

6.2 Regulatory and Policy Considerations

Twenty years ago, EIT departments could set their own standards and policies for their code; however, in today’s world there are numerous governmental and industry regulations that need to be met, such as data center standards, safety standards, audit and financial control regulations, as well as security and data privacy regulations. And remember, this is in addition to whatever policies the enterprise has. Now even though these constraints should be highlighted in the requirements used by the construction team, the team must understand all of these policies and regulations, and make sure that the plan accounts for meeting all regulatory and policy requirements.

Some of the most pervasive regulations and policies are common to the areas below:

- Data Center Standards — There are a number of regulations and standards associated with the area of building and data center codes. From floor to ceiling tiles, air flow to electrical requirements this area is vast and requires engineering staff who know the area well. This includes ISO/IEC 24764:2010(E) [6] and Statement on Auditing Standards No. 70 (SAS 70) [7].

- Safety Standards — Safety is critically important. Many of the construction phase’s best practices and safety codes (e.g., ANSI/ISA 71.04-2013) are rooted in past incidents, and they usually require knowledgeable staff or contractors to ensure that they are being followed.

- Audit and Financial Controls — Financial and personnel reporting regulations (e.g., Sarbanes-Oxley Act, SOX compliance) belong to an area that has come under significant scrutiny since 2000. If the solution deals in these areas, individuals with this expertise need to be part of the team.

- Security and Data Privacy — Regulations associated with security and data privacy are growing at a significant rate. The team needs to understand local, state, national, and international laws these days. See Chapter 5 Security for more details.

6.3 Most Common Construction Planning Mistakes

Unfortunately, the construction plans often don’t resemble what actually occurs during the project. Some of the differences between “planned” and “actual” are expected; however, often teams don’t do a very good job of planning. The most common development planning mistakes are:

- Not anticipating or managing changes to scope.

- Not planning time for quality, security, or other non-functional requirements.

- Not leaving time for testing and fixing bugs.

- Not planning in holidays, vacations, and illness.

- Not planning in non-delivery or slow-delivery by vendors.

6.4 Critical Project Management Activities

Many EIT groups do not carefully track the progress of their projects. This is a huge mistake that can cause a project to balloon to 200% or more of its original length or cost. For the most part, construction phase project management is just like project management for any other project. However, a few aspects are unique to construction and must be executed well to keep the project under control.

6.4.1 Taking Measurements During Construction

When you are building a kitchen, it is fairly easy to understand how much of the project you have completed and how much you have left to do. Understanding the project is visual and relatively easy. This is not the case with most EIT projects, especially when the software components are being created. And, as mentioned above, a project can get out of control very quickly. As a result, it is critical to constantly take measurements that can provide information about how much progress is being made, or how much trouble the project is in. There are two types of measurements that can be taken, direct ones and indirect ones.

Direct measurements are those that can be measured or counted exactly and directly. They include things such as costs of components, amount of effort/resources consumed, lines of code written, the number of tasks or features completed, the speed of the solution’s response, the number of tests being performed on the solution, and the number of outstanding bugs. Indirect measurements are those things that are much harder to quantify, such as the quality, complexity, stability, or maintainability of the product; however, one can often use direct measurements to get some idea of the indirect measurements.

In general, project managers need to focus on direct measurements. They need to take them frequently and they can hopefully use them to understand how well the project is progressing or when it is in trouble. Identifying the need for course corrections early always saves time and money.
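As an illustration of using direct measurements to approximate indirect ones, the sketch below derives two simple indicators from counts a team could collect. The function names are hypothetical, and treating defect density as a rough proxy for quality is an assumption of this example rather than a rule stated here.

```python
def percent_complete(tasks_done, tasks_total):
    """Share of planned tasks finished, as a percentage."""
    return 100.0 * tasks_done / tasks_total


def defect_density(open_bugs, lines_of_code):
    """Open bugs per thousand lines of code (KLOC)."""
    return open_bugs / (lines_of_code / 1000.0)


print(percent_complete(30, 120))  # 25.0
print(defect_density(18, 12000))  # 1.5
```

Tracked at regular intervals, a rising defect density alongside a flat completion percentage is the kind of early signal that suggests a course correction.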

6.4.2 Managing Other Parties

Usually, in EIT projects, it is as important to manage non-team parties such as stakeholders, procurement groups, and vendors as it is to manage the construction team. These “outside” individuals or groups can totally derail a project. Managing them usually requires excellent communication skills, patience, diplomacy, and a strong managing hand. Managing third parties is an activity that needs to be continuous from the beginning to the end of the project.

6.4.3 Change Request Management

Whether your project is building a kitchen or building a CRM solution, requests for changes are going to be made to the requirements and design of the solution during the construction phase. Some issues and interdependencies can’t be identified until the team is down in the bits coding basic functionality. You also can’t see some problems with the interface design or flow of the application until the beta-testers are let loose on the system.

So, change is going to happen. The management skill that is required for all projects is first to understand whether a change is critical or non-critical, and then to convince proposers that non-critical changes must wait for a future development phase. There are many techniques for keeping the user community under control, but the most useful is to show them how much the change is going to cost and how long it will delay the delivery of the system.

In any case, having a robust change request management system is critical, and it should:

- Define a change request carefully and completely

- Document the business case for the change

- Document who requested the change and whether all concerned parties agree that it is important

- Document costs, potential return on investment, assumptions, and risks

- Get approval for making the change to the plan from management (usually a CAB)
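The items above can be captured in a structured record so that incomplete requests are caught before they reach the approval step. This is a minimal sketch with hypothetical field names; which gaps should block a request is an assumption of the example.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class ChangeRequest:
    description: str
    business_case: str
    requested_by: str
    stakeholders_agree: bool
    estimated_cost: float
    expected_return: float
    assumptions: List[str] = field(default_factory=list)
    risks: List[str] = field(default_factory=list)
    approved_by: Optional[str] = None  # e.g. the CAB, once it signs off

    def missing_items(self):
        """List documentation gaps that should be closed before approval."""
        gaps = []
        if not self.description.strip():
            gaps.append("description")
        if not self.business_case.strip():
            gaps.append("business case")
        if not self.stakeholders_agree:
            gaps.append("stakeholder agreement")
        if not self.risks:
            gaps.append("risks")
        return gaps


request = ChangeRequest(
    description="Add export to CSV",
    business_case="",
    requested_by="Finance",
    stakeholders_agree=True,
    estimated_cost=4000.0,
    expected_return=9000.0,
)
print(request.missing_items())  # ['business case', 'risks']
```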

7 Developing the Application

Although application development is a relatively mature area of EIT, the languages and tools are constantly in flux. Typically, each EIT department will standardize on its particular set of programming languages, development tools, programming standards, and development methodologies. Rarely will a development team be allowed to bring in new construction technology without justification.

Extensive information on software development can be found in the IEEE-CS SWEBOK Chapter 3 Software Construction [12]. It is important to know that there are a number of options for constructing a solution, from purchasing packaged solution components that can be used straight out of the package (often termed a commercial off-the-shelf [COTS] solution), to purchasing solution components that need to be customized, to building a component completely in house.

7.1 Solution Development Options

There are now many options for developing an application, which differ in how many components the EIT department implements or customizes versus how much of the solution is purchased from a vendor.

- “Roll Your Own” Solution. No matter what solution component you are talking about, when the enterprise takes on the development of the solution, it is taking on a good deal of responsibility for creating and maintaining the solution. It also takes on the responsibility for mastering and keeping up with all the technology and skills involved with developing the solution. In some instances, this commitment makes sense. In others, it does not. Each component of each solution needs to be reviewed with respect to the best method for constructing the component.

- Hiring third-party developers. Sometimes the expertise or amount of resources necessary is not available in house. In this case, people hire outside developers to create all or part of the solution. This option, in many situations, is the hardest. The enterprise ends up shouldering the cost of creating and maintaining the solution throughout its lifecycle, often through the vendor that originally developed the solution component. In addition, managing outside resources to create the correct things is almost always harder than managing your in-house staff. But sometimes, you don’t have another option.

- Customized packaged software. Many vendors provide software or hardware components that have most of the functionality that an enterprise needs, but they allow for customizations that accommodate critical business processes or policies. Customizations can be performed by the vendor, and vendors often provide tools (such as APIs) to enable settings to be changed, or customized modules to be developed and added to work with the rest of the software package. Again, this lowers the development and maintenance costs greatly over creating your own solution components.

- Off-the-shelf solution components. The cheapest way to provide a solution is to purchase off-the-shelf software and hardware components, whenever they are available. Not only is the purchase price generally much cheaper than any other option, typically, so is the maintenance. The vendor can amortize its efforts to maintain the code over many customers; the EIT department can’t.

- Cloud-based solution components. When we think of services provided in the “cloud,” we most often think of software or storage services. The fact is, there is a whole stack of services now being provided in the cloud:

- Infrastructure as a Service (IaaS) — This is the most basic cloud-service model that provides machines, servers, storage, network services, and even load balancing. IaaS can be provided as either standard Internet access or as virtual private networks.

- Platform as a Service (PaaS) — This cloud layer provides platforms whether they be execution runtimes, databases, web servers, or development tools. The customer typically does not manage any of the infrastructure for this layer, but it does have some control over which platforms are available and other settings.

- Software as a Service (SaaS) is a method of purchasing applications that are running on a cloud infrastructure. These solutions are generally stand-alone services that meet a specific business need.

- Integration between in-house and cloud services is often complex and unstable, and requires special security measures. As with the other services, SaaS can be provided through either standard Internet service or as a virtual private network.


It is important to note that despite the fact that a vendor is providing a service, due diligence is still required to ensure that contracts between the two parties specify exactly what the enterprise requires from the cloud provider in terms of service, performance, security, and other measures. The enterprise must understand that using cloud applications does not transfer responsibility for data and security to the cloud vendor; EIT management is still responsible for ensuring that things such as privacy and regulation controls are being met by the cloud service.

7.2 Hardware and Software Infrastructure

Writing the software for an application is only a small part of what needs to get constructed with an EIT solution. Remember that the solution will likely require hardware infrastructure and software infrastructure (middleware) components:

| Hardware Infrastructure | Software Infrastructure |
|---|---|

7.3 Software Construction

Numerous approaches have been created to manage the development of software, some of which emphasize the construction phase more than others. These are often referred to as software development lifecycle models, or, more properly perhaps, software development methodologies.

Schedule (or predictive) models are more linear from the construction point of view, such as the waterfall and staged-delivery life cycle models. These models treat construction as an activity which occurs only after significant prerequisite effort has been completed—including detailed requirements work, extensive design work, and detailed planning. Each defined milestone is a checkpoint for signing off on the work completed so far, and authorizing it to go ahead. It often results in “big bang” effects, as all features must go in lock step. That’s different from staged delivery of capabilities, which is often a result of incremental approaches. This approach has generally been preferred by companies providing outsourced systems integration work.

Other models, usually termed iterative, are less demanding of big upfront scheduling precision, working to refine schedules as each iteration completes. They comprise sequences of relatively short periods of development, each such cycle using (iterating) the same sequence of steps to refine a selected set of requirements, code, build, and test the results. Some iterations may deliver working increments of the total solution; others may focus on solving specific problems, like getting the architecture right up front. Some examples are evolutionary prototyping, Dynamic Systems Development Method (DSDM), Feature Driven Development, Test Driven Development, and Scrum. These models tend to develop by feature, so individual features may be at different stages, some already being coded or tested, while others are still being designed.


7.3.1 Developing and Using Best Coding Practices

Coding practices are informal rules that EIT organizations adopt that help increase the quality of the software components. These best practices are designed to increase the “abilities” of the code, especially reliability, efficiency, usability and maintainability.

Best Coding Practices usually include:

- Coding conventions

- Code commenting standards

- Naming conventions

- Portability standards

- Security standards

- Modularity practices

7.3.2 Performance Tuning & Code Optimization

In some situations, going back and “tuning” the code to increase its performance or decrease its storage usage is critical. Although it isn’t always necessary, the team should include some time for code tuning in their schedule and then take the time to do it. If performance is expected to be a critical characteristic of the solution, then the team should anticipate that they will need to spend a lot of time tuning.

It is important that one does not confuse high-performance code with short or pretty code. The saying that fewer lines are faster isn’t always right. In addition, how to go about optimizing code is different in nearly every programming language, because knowing how the compiler translates the code into machine code is an important piece of knowing how to tune. Optimization is an art form. The best optimizers have many years of experience and they know their compilers inside and out.

7.4 System and Code Integration

Often the rubber hits the road when the integration process starts. Most of us think of integration as putting software modules together, or setting up a data center. The problem is that integration includes both of those activities and several more. The more complex the EIT solution, the more important it is to use a configuration management system. In addition, if the new solution needs to be integrated with pre-existing systems, the complexity increases even more unless interfaces are clearly defined and maintained as “contracts” between systems.

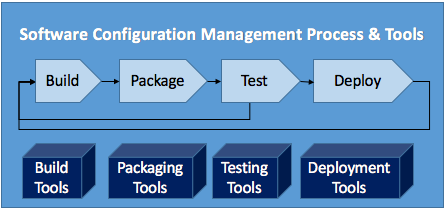

The configuration management process consists of four basic steps: building, packaging, testing, and deployment. There are numerous tools that are available to help with each step in the process. For example, common tools used to build solutions are make and Ant. Packaging tools provide help with installing, upgrading, configuring, and deinstalling software. Testing tools help run automated tests on the builds (see Testing, Verifying, and Validating the Solution for more information). Deployment tools automate and regulate the deployment of software, enabling it to be used. This includes the release, install, activation, version tracking, and uninstalling processes.

Figure 3: Software Configuration Management Process and Tools
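The four steps can be sketched as a fail-fast pipeline in which a failure at any step stops everything after it. This is an illustrative outline only: the stage callables are placeholders, and a real pipeline would invoke the team's actual build, packaging, test, and deployment tools.

```python
def run_pipeline(stages):
    """Run (name, callable) stages in order and stop at the first failure.

    Each callable returns True on success. Returns a tuple of the completed
    stage names and the name of the failed stage (or None if all passed).
    """
    completed = []
    for name, stage in stages:
        if not stage():
            return completed, name
        completed.append(name)
    return completed, None


# Placeholder stages; the "test" stage simulates a failing automated test.
stages = [
    ("build", lambda: True),
    ("package", lambda: True),
    ("test", lambda: False),
    ("deploy", lambda: True),
]
completed, failed = run_pipeline(stages)
print(completed, failed)  # ['build', 'package'] test
```

Because the deploy stage never runs after a failed test, a broken build cannot reach users, and the developer learns which step failed.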

Many configuration management experts believe that the configuration management process needs to be continuous, not something that happens a couple times during the construction process and then at the end. A developer should know nearly instantaneously whether recent changes will cause issues with the rest of the solution. These fast builds are often referred to as "pre-flight" builds, because they are performed before the code is checked in. An excellent paper on continuous integration by Martin Fowler titled Development: Five Steps to Continuous Integration suggests that:

"Continuous Integration is a software development practice where members of a team integrate their work frequently; usually each person integrates at least daily — leading to multiple integrations per day. Each integration is verified by an automated build (including test) to detect integration errors as quickly as possible."

Integration needs to be carefully planned and even more carefully executed. Communications at every interface need to be anticipated and designed, and the design must be followed during construction. All the following integration points need to be considered:

- Between modules within a code base

- Third-party software modules or applications with enterprise-developed or existing software.

- Software interactions with existing clients, servers, databases

- Software and hardware interactions with existing networks

- New solution components with existing security measures

IT systems are often very complex and they have many integration and data transfer points. Integrating new services into existing environments requires a good deal of knowledge of different technologies, processes, and policies and their interactions.

7.5 Documenting The Solution

Most developers hate to document their work, preferring getting the job done to writing about it; however, solution documentation is critical. Each layer of the solution needs to be documented, and the documentation required depends on what layer you need to document.

- For the hardware layer, the documents should discuss the specs of the hardware, how they are organized, special settings, special customizations, and required maintenance.

- Documentation for the software infrastructure layer needs to describe each component and how it is being used. Any policies and procedures around using the infrastructure need to be described. Any customizations or usage assumptions need to be described.

- Database documentation needs to describe the database as a whole, but even more important is that it must be like a dictionary. It needs to describe every field, what it is used for, what its format is, and which applications or components use or set the data.

- Software documentation is critical for maintenance of the code, both finding/fixing bugs and extending the code in the future. According to The Developer Insights Report (http://www.appdevelopersalliance.org/dev-insights-report-2015-media) published by the Application Developers Alliance, one of the top ten developer frustrations is maintaining poorly documented code, as it takes significantly more time than maintaining well-documented code.

But what is well-documented code? It should describe what the code does, but more importantly, it needs to describe why it does what it does. Good documentation enhances the readability and transparency. It should be written for people who don’t know the code already and who don’t have time to read all the code to figure out what this piece does and why it’s done here.
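As a small illustration of documenting the "why" and not just the "what", consider the sketch below. The function, the retry limit, and the upstream service it mentions are all hypothetical and exist only to show the style of comment.

```python
MAX_RETRIES = 3  # hypothetical limit chosen for this example


def fetch_with_retry(fetch, retries=MAX_RETRIES):
    """Call fetch() until it succeeds or the retries are exhausted.

    Why: the (hypothetical) upstream inventory service drops connections
    during its nightly backup, so a single failure is usually transient
    and should not abort the whole batch job.
    """
    if retries < 1:
        raise ValueError("retries must be at least 1")
    last_error = None
    for _ in range(retries):
        try:
            return fetch()
        except ConnectionError as error:
            last_error = error  # remember the failure and try again
    raise last_error
```

The docstring's first line says what the function does. The "Why" paragraph records the reasoning that a maintainer could not recover from the code alone.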

Beyond the inline code comments, other documents about the code base as a whole should be written. Often, explanatory blocks are written at the head of each section of code. Documentation should explain:

- Data structures

- Important algorithms

- Communication conventions between modules

- Critical assumptions made in the coding process

Most experienced developers believe that the time that they spend writing documentation saves them or someone else even more time in the future, and if the code is being altered, understanding the code prevents people from butchering the original code. It really is worth it.

8 Testing, Verifying, and Validating the Solution

The Construction team includes those who will test the solution. Together, developers and testers construct the mechanisms and processes for testing the solution, as well as the test cases; verify that the solution meets all the requirements; and validate that the solution does indeed match the needs of the users. Depending on the solution, these mechanisms and processes can be quite simple, or they can be extensive and complicated.

8.1 Build Testing Suites

Testing must be done on all software and hardware constructed or used for the solution. Creating automatic and manual test suites can involve a number of techniques. Here are a few of the most common.

8.1.1 Solution Regression Testing

Regression Testing consists of using an established set of tests to ensure that the solution behaves as designed throughout any changes. Obtaining the same results, without introducing errors, verifies that the solution continues to perform correctly and consistently despite ongoing changes to the code base, database, and hardware throughout the construction process. Regression testing is usually performed on a regular basis, typically after each new application build is generated or new hardware/firmware is installed.

Regression testing is used with many of the other techniques listed below.

8.1.2 Software Test Harnesses

Test harnesses automate the testing process. They consist of an execution engine and a set of test scripts that can be run to test the entire solution or just a specific part of the solution. They can also consist of custom code that the testing team runs to exercise the solution in particular ways.

The results from the tests are collected and compared to expected results. Test harnesses provide the following benefits over manual testing:

- Increased productivity of staff

- Assurance that regression testing can occur on a regular basis, even overnight

- Accuracy and exact repeatability of tests

- Ability to set up and test uncommon conditions
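A test harness of the kind described above can be sketched in a few lines: an execution engine loops over a set of test cases, runs each one, and compares the actual result with the expected result. The function under test and the test cases here are hypothetical.

```python
def run_harness(test_cases):
    """Run each (name, action, expected) case and record pass, fail, or error."""
    results = {}
    for name, action, expected in test_cases:
        try:
            actual = action()
            results[name] = "pass" if actual == expected else "fail"
        except Exception:
            # A crash in the code under test is recorded, not propagated,
            # so the remaining cases still run.
            results[name] = "error"
    return results


def total_price(quantity, unit_price):
    """Hypothetical code under test."""
    return quantity * unit_price


test_cases = [
    ("two items", lambda: total_price(2, 5), 10),
    ("zero items", lambda: total_price(0, 5), 0),
    ("deliberately wrong expectation", lambda: total_price(3, 5), 14),
]
results = run_harness(test_cases)
print(results)
```

Because the same cases can be rerun unchanged after every build, a harness like this also serves as the basis for regression testing.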

8.1.3 Software Unit Testing

Unit tests run through small units of code to make sure that they work as designed. A unit can be as small as a single function, or it can consist of a set of functions or modules, maybe even an entire library. In most cases, unit tests are run automatically; however, in some cases, the tests must be run manually using a script that tells a person how to perform the test.

Unit testing has a couple of advantages:

- Issues/bugs are easier to find and fix when the tests are confined to small amounts of code.

- A developer can test his/her code thoroughly before integrating it with the rest of the solution. The result is usually a faster and smoother integration.

Unit testing is often a part of the regression testing suite.
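A minimal sketch of automated unit tests, using Python's standard unittest module, follows. The unit under test, apply_discount, is a hypothetical function invented for the example.

```python
import unittest


def apply_discount(price, percent):
    """Hypothetical unit under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    def test_typical_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_no_discount(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)


# Load and run the tests explicitly so the outcome is available as a value.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ApplyDiscountTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Each test exercises one behavior of a single small function, which is what makes a failure easy to localize.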

8.1.4 Software and Product Integration testing

Software integration testing occurs continually, as new code or changes to old code are added to the builds. Product integration testing adds a couple of the solution components together, tests, and then adds a few more pieces. Eventually, all hardware, software, and communications should be tested together. This process is typically known as integration testing (or integration & testing) even though software integration testing has been occurring all along. Product integration testing allows the testing team to

- Test the interactions between components

- Test for unanticipated performance issues

There are several methods used with both types of integration testing:

- Bottom-up testing integrates the lowest level components first and when considered stable, tests high-level components. This technique is good when the lowest level components are done first and at about the same time. It is excellent as bugs are quite easily found.

- Top-down testing starts with the top integrated modules and tests branches of the modules step-by-step until the end of the related module is found. This technique allows the team to find missing branches.

- Sandwich testing is an approach that combines bottom-up and top-down testing.

- Risky module testing is an integration test that starts with the riskiest or hardest software module first.

- End-to-end testing tests the flow of an application from start to finish, even if all the functionality in the flow is not complete. The benefit of this kind of testing is that it identifies system dependencies and tests to see if the right information is passed between the different components of the solution. It can be performed at a very early stage of the development and can save huge amounts of time when issues are found early in the construction process.

- Big Bang, Usage Model, or Production testing (used in both hardware and software testing) runs user-like workloads in integrated environments that mimic the eventual environment (often called staging environments). This type of testing not only tests the software, but it also tests the environment. The downside of this type of testing is that it optimistically assumes that each of the integrated units is going to behave as planned. So, although it is more efficient, if there are issues, they might be tougher to find and fix.

- Parallel Testing is often included in Production testing when replacing critical systems, such as payroll or ERP systems. In this type of testing, the new system is run in parallel with the existing system to make sure that the new system performs as expected, with no less functionality and no greater errors. It is not expected that all results will be the same, since the new system is expected to have more/better functionality than the old one.

- Production testing can uncover the need for data center upgrades or enhancements before the solution goes live, which for obvious reasons is preferred. It can also uncover the need for workstation configuration changes or workstation application incompatibilities.
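For the bottom-up method in the list above, the order in which components enter the integration build can be derived from their dependencies. The sketch below assumes a hypothetical dependency map and uses the standard library's graphlib module (Python 3.9 or later) to produce an order in which every component appears after the components it depends on.

```python
from graphlib import TopologicalSorter

# Hypothetical components: name -> components it depends on.
dependencies = {
    "database_layer": [],
    "logging": [],
    "order_service": ["database_layer", "logging"],
    "web_ui": ["order_service"],
}

# static_order() yields each component only after all of its dependencies,
# which is the order a bottom-up integration would add them to the build.
integration_order = list(TopologicalSorter(dependencies).static_order())
print(integration_order)
```

The relative order of independent components (here, database_layer and logging) is not significant. A top-down approach would walk the same graph in the opposite direction, replacing components that are not yet available with stubs.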

8.1.5 Alpha & Beta Tests

Alpha and beta tests take integration one step further — they get the users involved. In some situations, alpha testing begins when the solution is turned over to an independent group for testing (as is typical in software product companies). In EIT, Alpha tests are used to find issues with the solution at an early stage when the solution is nearly fully usable — at least there should be significant functionality to test, and the user should be able to complete significant activities. For alpha tests, the user might be an engineer or someone from the testing group. In the best case, there should be one or two users from the eventual user community involved with this testing. Future users will often find errors or problems with workflow that a developer or engineer won’t. Alpha tests can last for months, as functionality is completed and refined.

Beta tests are used to ensure readiness for the solution release. They should occur when the team is pretty sure that the solution is close to ready for transition to the Production environment (see Transition). The beta test often only lasts for a few weeks. Beta tests not only test the integration of the system in a user environment doing user-like activities, they also test that the system works well for the users. Beta tests help identify:

- Hard to find bugs

- Mismatches with the user’s workflow

- User interface issues

- Performance issues

- Ambiguity or lack of clarity with instructions or the interface in general

8.1.6 Security Testing — Vulnerability Scans and Remediation

Twenty years ago, security testing consisted of making sure that the user authentication scheme was working as intended. Now, security testing, especially for web-based applications, requires careful designing and planning. Companies often have outside organizations attempt to “break in” to the applications to verify their security design. In any case, security testing is now a critically important and significant part of the testing process. It also can’t be left to the very end. For more information, see Security.

8.2 Verifying

The solution verification process runs parallel to the testing process. It doesn’t test for minor bugs in the engineering; instead, it checks that the solution correctly reflects the technical specification and that the team is following coding and other implementation standards. In essence, is the construction team building the solution right?

Verification includes activities such as requirements reviews, code reviews, code inspections, and code walkthroughs. All of these activities are systematic reviews that are intended to find mistakes early in the coding phase and so improve the overall quality of the software.
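Part of a standards check can be automated, which leaves reviewers more time for design and logic. The following is a minimal sketch, assuming a hypothetical team rule that every function must carry a docstring. The function name `find_undocumented_functions` and the rule itself are illustrative assumptions, not drawn from any particular standard, and a check like this supplements human reviews rather than replacing them.

```python
import ast


def find_undocumented_functions(source: str) -> list[str]:
    """Return the names of functions in `source` that lack a docstring.

    Illustrative only: "every function has a docstring" is a hypothetical
    coding standard chosen for this example.
    """
    tree = ast.parse(source)
    missing = []
    for node in ast.walk(tree):
        # Covers both regular and async function definitions.
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if ast.get_docstring(node) is None:
                missing.append(node.name)
    return sorted(missing)


if __name__ == "__main__":
    sample = '''
def documented():
    """Explain what this does."""
    return 1

def undocumented():
    return 2
'''
    print(find_undocumented_functions(sample))  # ['undocumented']
```

A team could run a check of this kind before a code inspection so that the meeting focuses on whether the code reflects the technical specification.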

8.3 Validation

Validation is as important as testing and verification. Validation reviews make sure that the construction team is building the right thing. The validation team needs to make sure that the solution correctly reflects the logical design and meets all business requirements. Most important, validation needs to make sure that the solution meets customers’ needs.

Alpha and beta tests are usually good ways to make sure that the solution is meeting the customers’ needs. Just verifying that the solution “does what it is supposed to do” is usually not good enough. As too many developers know, the logical design doesn’t always get it right. When the users see the solution in their environment, they may realize that it needs to behave differently than they thought. You can’t find these things out without testing with users.

In most organizations, the validation process ends with a signoff from the user community that indicates that they will accept the solution, as constructed.

9 Handing off the Solution

Once the Construction team has completed its testing, verification, and validation procedures, it can hand the project off to the Transition team or, if there is no separate Transition team, start the transition activities itself (see Transition Into Operation). In EIT software development, best practice today is evolving toward a DevOps approach, in which developers work with the Operations and Support staff to ensure a smooth transition into production.

Connecting with those responsible for the transition to standard operations is critical. The Transition team needs to understand what has been constructed, and must be extremely familiar with the technical design. They need to check that the documentation is complete enough that they can execute the transition without constantly coming back to ask questions. Technical training should also be in place for the Transition team.

The quality of the solution must also meet the standards of the Transition team. If the Transition team believes that the solution will not work in day-to-day operations because of quality issues, the Construction team will likely need to continue making fixes until the Transition team is satisfied. In addition, in most environments, a robust configuration management process must be in place before the handoff. The Transition team will need that process as it prepares the solution for the transition.
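The handoff prerequisites described above can be tracked as a simple readiness gate. This is a minimal sketch, not a prescribed process: the class `HandoffReadiness` and its field names are hypothetical, chosen to mirror the four conditions in the text (documentation, training, quality acceptance, and configuration management).

```python
from dataclasses import dataclass


@dataclass
class HandoffReadiness:
    """Hypothetical handoff checklist; each field mirrors one prerequisite."""

    documentation_complete: bool
    transition_team_trained: bool
    quality_accepted_by_transition_team: bool
    configuration_management_in_place: bool

    def open_items(self) -> list[str]:
        """Return the names of checklist items that are not yet satisfied."""
        return [name for name, done in vars(self).items() if not done]

    def ready(self) -> bool:
        """The handoff can proceed only when no items remain open."""
        return not self.open_items()


if __name__ == "__main__":
    status = HandoffReadiness(
        documentation_complete=True,
        transition_team_trained=False,
        quality_accepted_by_transition_team=True,
        configuration_management_in_place=True,
    )
    print(status.ready())       # False
    print(status.open_items())  # ['transition_team_trained']
```

Listing the open items, rather than returning only a yes or no, gives both teams a shared view of what still blocks the handoff.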

10 Summary

Today’s EIT systems are complex, with many components that must come together in a harmonious way. It takes an EIT team with diverse knowledge of hardware, infrastructure or middleware, databases, storage devices, network computing, and application development to ensure that proper construction techniques are used to deliver right-sized infrastructure and a performant application.

Integrating a new solution with existing enterprise solutions is often challenging. It definitely adds to the complexity of the construction effort and requires not only expertise with technology, but also with the existing EIT applications.

11 Key Competence Frameworks

While many large companies have defined their own sets of skills for purposes of talent management (to recruit, retain, and further develop the highest quality staff members that they can find, afford and hire), the advancement of EIT professionalism will require common definitions of EIT skills that can be used not just across enterprises, but also across countries. We have selected 3 major sources of skill definitions. While none of them is used universally, they provide a good cross-section of options.

Creating mappings between these frameworks and our chapters is challenging, because they come from different perspectives and have different goals. There is rarely a 100% correspondence between the frameworks and our chapters, and, despite careful consideration, some subjectivity was used to create the mappings. Please take that into consideration as you review them.

11.1 Skills Framework for the Information Age

The Skills Framework for the Information Age (SFIA) has defined nearly 100 skills. SFIA describes seven levels of competency that can be applied to each skill. Not all skills, however, cover all seven levels. Some reach only partway up the seven-step ladder. Others are based on mastering foundational skills, and start at the fourth or fifth level of competency. SFIA is used in nearly 200 countries, from Britain to South Africa, South America, the Pacific Rim, and the United States. (http://www.sfia-online.org)

| Skill | Skill Description | Competency Levels |

|---|---|---|

| Animation development | The architecture, design and development of animated and interactive systems such as games and simulations. | 3 - 6 |

| Database design | The specification, design and maintenance of mechanisms for storage and access to both structured and unstructured information, in support of business information needs. | 2 - 6 |

| Hardware design | The specification and design of computing and communications equipment (such as semiconductor processors, HPC architectures and DSP and graphics processor chips), typically for integration into, or connection to an IT infrastructure or network. The identification of concepts and their translation into implementable design. The selection and integration, or design and prototyping of components. The adherence to industry standards including compatibility, security and sustainability. | 4 - 6 |

| Information content authoring | The management and application of the principles and practices of designing, creation and presentation of textual information, supported where necessary by graphical content for interactive and digital uses. The adoption of workflow principles and definition of user roles and engagement and training of content providers. This material may be delivered electronically (for example, as collections of web pages) or otherwise. This skill includes managing the quality assurance and authoring processes for the material being produced. | 1 - 6 |

| Network design | The production of network designs and design policies, strategies, architectures and documentation, covering voice, data, text, e-mail, facsimile and image, to support strategy and business requirements for connectivity, capacity, interfacing, security, resilience, recovery, access and remote access. This may incorporate all aspects of the communications infrastructure, internal and external, mobile, public and private, Internet, Intranet and call centres. | 5 - 6 |

| Porting/software configuration | The configuration of software products into new or existing software environments/platforms | 3 - 6 |

| Programming/software development | The design, creation, testing and documenting of new and amended software components from supplied specifications in accordance with agreed development and security standards and processes. | 2 - 5 |

| Systems design | The specification and design of information systems to meet defined business needs in any public or private context, including commercial, industrial, scientific, gaming and entertainment. The identification of concepts and their translation into implementable design. The design or selection of components. The retention of compatibility with enterprise and solution architectures, and the adherence to corporate standards within constraints of cost, security and sustainability. | 2 - 6 |

| Systems development management | The management of resources in order to plan, estimate and carry out programmes of solution development work to time, budget and quality targets and in accordance with appropriate standards, methods and procedures (including secure software development). The facilitation of improvements by changing approaches and working practices, typically using recognised models, best practices, standards and methodologies. The provision of advice, assistance and leadership in improving the quality of software development, by focusing on process definition, management, repeatability and measurement. | 5 - 7 |

| Systems installation/ decommissioning | The installation, testing, implementation or decommissioning and removal of cabling, wiring, equipment, hardware and associated software, following plans and instructions and in accordance with agreed standards. The testing of hardware and software components, resolution of malfunctions, and recording of results. The reporting of details of hardware and software installed so that configuration management records can be updated. | 1 - 5 |

| Systems integration | The incremental and logical integration and testing of components and/or subsystems and their interfaces in order to create operational services. | 2 - 6 |

| Testing | The planning, design, management, execution and reporting of tests, using appropriate testing tools and techniques and conforming to agreed process standards and industry specific regulations. The purpose of testing is to ensure that new and amended systems, configurations, packages, or services, together with any interfaces, perform as specified (including security requirements), and that the risks associated with deployment are adequately understood and documented. Testing includes the process of engineering, using and maintaining testware (test cases, test scripts, test reports, test plans, etc) to measure and improve the quality of the software being tested. | 1 - 6 |

| User experience design | The iterative development of user tasks, interaction and interfaces to meet user requirements, considering the whole user experience. Refinement of design solutions in response to user-centred evaluation and feedback and communication of the design to those responsible for implementation. | 2 - 6 |

11.2 European Competency Framework

The European Union’s European e-Competence Framework (e-CF) has 40 competences and is used by a large number of companies, qualification providers and others in public and private sectors across the EU. It uses five levels of competence proficiency (e-1 to e-5). No competence is subject to all five levels.

The e-CF is published and legally owned by CEN, the European Committee for Standardization, and its National Member Bodies (www.cen.eu). Its creation and maintenance have been co-financed and politically supported by the European Commission, in particular, DG (Directorate General) Enterprise and Industry, with contributions from the EU ICT multi-stakeholder community, to support competitiveness, innovation, and job creation in European industry. The Commission works on a number of initiatives to boost ICT skills in the workforce. Versions 1.0 to 3.0 were published as CEN Workshop Agreements (CWA). The e-CF 3.0 CWA 16234-1 was published as an official European Norm (EN), EN 16234-1. For complete information, please see http://www.ecompetences.eu.

| e-CF Dimension 2 | e-CF Dimension 3 |

|---|---|

| A.5. Architecture Design (PLAN) Specifies, refines, updates and makes available a formal approach to implement solutions, necessary to develop and operate the IS architecture. Identifies change requirements and the components involved: hardware, software, applications, processes, information and technology platform. Takes into account interoperability, scalability, usability and security. Maintains alignment between business evolution and technology developments. | Level 3-5 |

| A.6. Application Design (PLAN) Analyses, specifies, updates and makes available a model to implement applications in accordance with IS policy and user/customer needs. Selects appropriate technical options for application design, optimising the balance between cost and quality. Designs data structures and builds system structure models according to analysis results through modeling languages. Ensures that all aspects take account of interoperability, usability and security. Identifies a common reference framework to validate the models with representative users, based upon development models (e.g. iterative approach). | Level 1-3 |

| B.1. Application Development (BUILD) Interprets the application design to develop a suitable application in accordance with customer needs. Adapts existing solutions by e.g. porting an application to another operating system. Codes, debugs, tests and documents and communicates product development stages. Selects appropriate technical options for development such as reusing, improving or reconfiguration of existing components. Optimises efficiency, cost and quality. Validates results with user representatives, integrates and commissions the overall solution. | Level 1-3 |

| B.2. Component Integration (BUILD) Integrates hardware, software or sub-system components into an existing or a new system. Complies with established processes and procedures such as, configuration management and package maintenance. Takes into account the compatibility of existing and new modules to ensure system integrity, system interoperability and information security. Verifies and tests system capacity and performance and documentation of successful integration. | Level 2-4 |