Interoperability


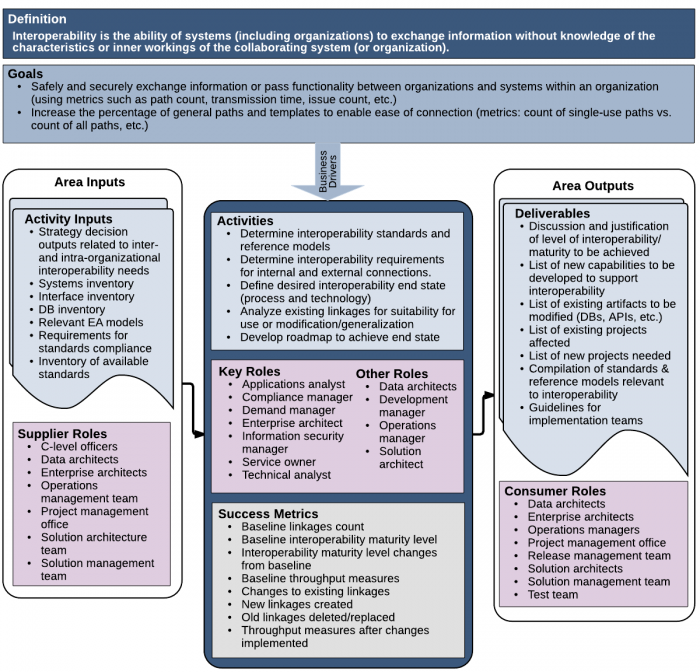

1 Introduction

The need for sharing information among information systems has grown exponentially with the adoption of automated services in the enterprise. These services have expanded from their back-office roots in accounting and personnel records to sales order management, product distribution, marketing support, business analytics in the executive suite, customer support, customer communication and relationship management, online self-service for employee benefits, and other areas.

The proliferation of software, hardware, networks, and storage systems resulted in redundancy and duplication in Enterprise information technology (EIT) assets as well as enterprise data, which meant burgeoning maintenance costs, increased reliance on service providers, and increased costs to the enterprise.

As more specialized information systems were added to support different functional areas, departmental managers realized they could gain operational efficiencies if their information was integrated. So began the great chase for information sharing that has fostered a myriad of efforts to develop technical "workarounds" to link one system to another.

The technical challenge was huge because all of these systems lacked interoperability capabilities. To achieve information sharing between systems that generate, modify, and use data, EIT had to create linkages among commercial off-the-shelf (COTS) products, home-grown and custom systems, and customized packages. The linkages needed to be safe, secure, accurate, and speedy. Not only were the linkages costly to develop, but because they were developed in an ad hoc manner, as one-offs, they also created nightmares of complexity, unexpected or unexplained outages, greater support costs, and other issues. This greater support effort left EIT staff with less time to develop new EIT capabilities for the enterprise, so they were seen as uncooperative and, in fact, as a choke point for the enterprise.

Trends emerging today only exacerbate the problem—mobility, the cloud, and bring your own device (BYOD), to name a few. Of course, the ultimate challenge for interoperability is the Internet of Things (IoT). Big data and the IoT presuppose a powerful mechanism to collect and aggregate data from disparate sources. That is, they presuppose some degree of interoperability. Similarly, enterprises that want to move their data to the cloud expect interoperability among cloud providers.

As used in this chapter, interoperability means the ability of systems (including organizations) to exchange and use exchanged information without knowledge of the characteristics or inner workings of the collaborating systems (or organizations). This definition is based on an ISO definition: "degree to which two or more systems, products, or components can exchange information and use the information that has been exchanged." [1] Note that this definition includes hardware components as well as software systems and the organizations in which they are used.

In the enterprise, information needs to be exchanged to get a job done, or, at the highest level, to achieve a defined objective of the enterprise. When information can't be shared automatically by one system or person with another, that information is manually copied, manually updated in one or more private local versions, or otherwise re-created by the other person or system. Employees who know the data exists find a way to get it, usually through undocumented manual processes.

If it's a simple matter of producing an exact copy of the original information, there is still an extra cost to do so. If the information must be re-developed, additional costs are incurred. In either case, if the information is ever needed again (and it almost always is), then the cost of duplicating information continues. Worse, it can lead to errors. For example, if information is changed in one place, it should be changed in the copy—assuming that both of the information owners know about the change.

2 Goals and Guiding Principles

An enterprise should have the following goals for interoperability:

- Safely and securely exchange information or pass functionality between organizations and systems within an organization (with metrics such as path count, transmission time, and issue count).

- Increase the percentage of general paths and templates to enable ease of connection.

The actions that address these goals should also

- Minimize replication—Link data rather than copy it.

- Minimize steps in data flows—Create the shortest paths between the data source and the data target.

- Minimize human effort—Share data automatically.

- Minimize data interfaces—Create interfaces that are flexible or generic enough to suit multiple uses.

- Maintain modularity.

These principles for interoperability design require an emphasis on simplicity:

- Data is the enterprise's most valuable asset and must be protected accordingly. Protect privacy and security in all aspects of interoperability.

- Data usage and maintenance must be carefully governed by a well-understood data governance framework.

- Standard data definitions are fundamental to data exchange.

- Enterprise architecture is a powerful enabler of efficacious interoperability.

- Interoperability is a property of a system (a system that can share with another system and use another system's data) but its value is as a property of a system of systems (systems sharing data with each other).

- Consider the current environment; build upon existing infrastructure, but build for scalability and safe access.

3 Context Diagram

4 Description

4.1 Barriers to Interoperability

Before selecting an approach to interoperability, it is important to have a good idea of the potential challenges. The challenges to be overcome to achieve interoperability are relatively daunting, and reach far beyond just technology. They include issues such as:

- Inconsistent adoption of standards, both formal and de facto

- Incompatible data models and semantics in existing data sources

- Incompatible vendor offerings

- Legacy technologies and databases

- Differing terminologies (different referents for the same term, such as homography and polysemy)

- Differing content formats (such as data, content, maps, and media)

This chapter discusses various approaches for overcoming these barriers to achieve the level of interoperability required by an enterprise. We can learn an important lesson from the fact that the earliest designers and implementers of interoperable automated systems were in manufacturing. "Integration in Manufacturing (IiM) is the first systemic paradigm to organize humans and machines as a whole system, not only at the field level, but also at the management and corporate levels, to produce an integrated and interoperable enterprise system." [2] In other words, manufacturing systems approached the problem of interoperability as a problem of putting together a system of systems, all of which had to share data.

4.2 Establishing Appropriate Interoperability in EIT Systems

Clearly, making systems interoperable can mean many things. The strongest drive for interoperability is technical interoperability—the technical problem of sharing information that already exists in different systems from different times and places by enabling sharing, or at least providing connected technical services.

Therefore, it is imperative to develop the big picture of what data the enterprise needs to share: what it receives as incoming data and what it sends to other systems. Both end points may reside within the enterprise, or one may reside in an external enterprise. In either case, the appropriate security of the shared data must be determined, along with other quality attributes like speed of transfer and reliability. Thus, interoperability is just one more attribute of systems of systems.

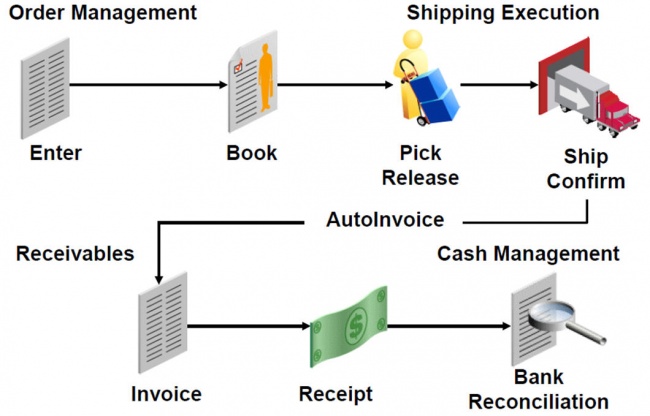

One of the most common functions within an enterprise that requires data sharing is order management, which includes selling goods, delivering them, and collecting payment. Order management covers the lifecycle of an order from the placing of the order until the order has been delivered and paid for. This is called the order-to-cash lifecycle.

Figure 2. Internal Data Sharing: Order-to-Cash Lifecycle

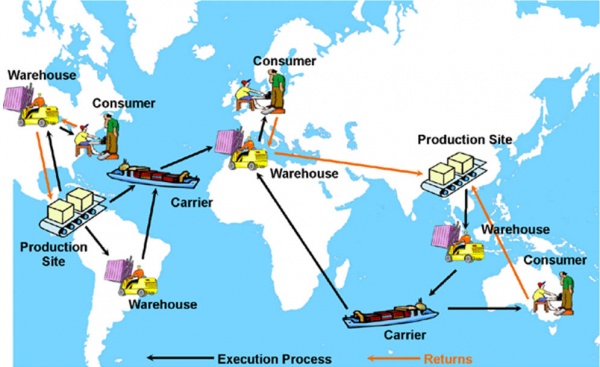

A more common example of interoperability with an external entity today is supply-chain management.

Figure 3. External Data Sharing

Most EIT organizations already have some degree of interoperability. However, most have not achieved higher levels of interoperability maturity because their approaches have evolved in an ad hoc manner as individual needs were identified. The effective use of big data and IoT requires an enterprise architecture (big picture) approach, as described in the Enterprise Architecture chapter.

An architecture team, composed of experts from enterprise architecture, data architecture, and solution architecture, needs to collaborate in identifying existing points of interoperation (linkages) in the existing EIT infrastructure. If they exist, EA models (data models, process models, and the technology landscape, for example) can be extremely useful in this exercise; otherwise, it is necessary to do some EIT archeology and create a map of the existing interoperability capabilities.

To determine the enterprise's requirements for interoperability maturity, it is necessary to understand the enterprise's overall needs for interoperability from examination of the enterprise's evolving strategic direction, including its needs for external data sharing. This examination is essential to determine the appropriate level of interoperability maturity required.

This analysis results in one or more models showing what data needs to be shared, by what EIT services and end users, and where data may be created, modified, read, used, and deleted.
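One common way to record such a model is a CRUD matrix that lists, for each data entity, which systems create, read, update, or delete it. The following is a minimal sketch of that idea; the system names, entity names, and helper functions are hypothetical and chosen only for illustration.

```python
from collections import defaultdict

# Hypothetical usage records: (system, data entity, operations), where the
# operations are drawn from C(reate), R(ead), U(pdate), D(elete).
USAGE = [
    ("order_entry", "customer_order", "CRU"),
    ("warehouse", "customer_order", "RU"),
    ("billing", "customer_order", "R"),
    ("billing", "invoice", "CRU"),
    ("crm", "customer", "CRUD"),
    ("order_entry", "customer", "R"),
]


def build_crud_matrix(usage):
    """Return {entity: {system: set of operations}}."""
    matrix = defaultdict(dict)
    for system, entity, ops in usage:
        matrix[entity].setdefault(system, set()).update(ops)
    return dict(matrix)


def shared_entities(matrix):
    """Entities touched by more than one system, i.e. candidate linkages."""
    return sorted(e for e, systems in matrix.items() if len(systems) > 1)


def creators(matrix, entity):
    """Systems that create the entity; more than one may signal duplication."""
    return sorted(s for s, ops in matrix[entity].items() if "C" in ops)


matrix = build_crud_matrix(USAGE)
print(shared_entities(matrix))
print(creators(matrix, "customer_order"))
```

Entities that appear under several systems are the places where interoperability is needed, and an entity with more than one creating system is a likely source of duplicated data.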

Using the models from its analysis of the enterprise's emerging needs, the architecture team defines the desired end state for interoperable capabilities, which in turn dictates how mature the organization needs to become. The higher the level of complexity, the higher the level of maturity; the higher the level of maturity, the higher the level of software engineering, EIT, and IS discipline required to reach the desired state of capability, reliability, and stability.

Having defined the end state, the team must then lay out a roadmap for the organization to achieve that end state. The roadmap provides the requirements and constraints for the technical approach to be taken.

4.3 Technical Approaches to Interoperability

The roadmap laid out by the enterprise architecture analysis and planning defines proposed projects, which may include replacing old technology, building new capabilities, and engaging in process streamlining. Given the vast amounts of data that probably already exist, the team needs to examine mechanisms for sharing that data in the implementation projects. There are two basic approaches: the use of application programming interfaces (APIs) and XML as a basis for syntactic interoperability, and the use of semantic markers on data.

4.3.1 Syntactic Interoperability

Syntactic interoperability is achieved through the use of standard sets of data formats, such as in XML and SQL, file formats, and communication protocols. Syntactic interoperability does not address the meaning of transferred data; it simply gets it from one place to another intact. The Extensible Markup Language (XML) standard is maintained by the W3C organization. Using XML and carefully defined XML syntax rules for schema definitions and element representation enables the structure and meaning of data to be defined, providing an easily accessible common framework for information exchange.

The use of XML is fast becoming a standard way of enabling data to be shared across various systems. XML is an evolutionary product emerging from the Generalized Markup Language (GML) and SGML, and it is similar to HTML, but its rigorous rules for use enable it to be reliable across most systems.
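As an illustration of syntactic interoperability, the sketch below has one system serialize an order as XML and another parse it back using Python's standard `xml.etree.ElementTree` module. The element names (`order`, `item`, `sku`, `quantity`) are assumptions made for this example, not part of any standard schema.

```python
import xml.etree.ElementTree as ET


def build_order_xml(order_id, sku, quantity):
    """Sending system: serialize an order into an agreed XML structure."""
    root = ET.Element("order", attrib={"id": order_id})
    item = ET.SubElement(root, "item")
    ET.SubElement(item, "sku").text = sku
    ET.SubElement(item, "quantity").text = str(quantity)
    return ET.tostring(root, encoding="unicode")


def parse_order_xml(document):
    """Receiving system: recover the fields, rejecting unexpected structure."""
    root = ET.fromstring(document)
    if root.tag != "order":
        raise ValueError(f"unexpected root element: {root.tag}")
    item = root.find("item")
    if item is None:
        raise ValueError("missing <item> element")
    return {
        "order_id": root.get("id"),
        "sku": item.findtext("sku"),
        "quantity": int(item.findtext("quantity")),
    }


message = build_order_xml("A-1001", "WIDGET-7", 3)
print(message)
print(parse_order_xml(message))
```

The receiver recovers the structure intact, but nothing in the format itself says whether `quantity` counts single units or cases. That question belongs to semantic interoperability.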

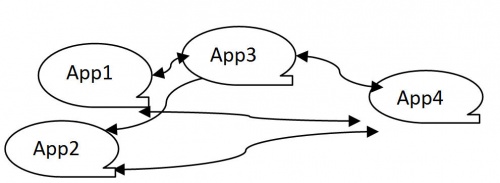

APIs are a common method of achieving syntactic interoperability. However, pair-wise APIs can be too complex to maintain.

Figure 4. API Interoperability

The maintenance difficulty stems from the large and ever-expanding number of APIs that companies require (typically between 11 and 50) and the corresponding increase in the number of API calls (most report between 500K and one million calls monthly). [3]

APIs are business critical, whether for consuming or providing information, and some organizations have instituted the role of API manager. Security is a major concern because APIs provide access to internal systems.

Because of the security and high traffic volume requirements, enterprises are increasing the use of API gateways to develop, integrate, and manage APIs. These gateways are the most common means by which EIT organizations track "end-to-end" transactions that use APIs. Cloud providers often provide tools that enable users to easily create a "front door" for applications to access data, business logic, or functionality from their back-end services.
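The "front door" idea can be sketched as a single entry point that authenticates each request, routes it to a back-end service, and counts calls for tracking. This is a toy in-memory illustration only; the `ApiGateway` class, the route path, and the API key are hypothetical and do not represent any vendor's gateway product.

```python
from collections import Counter


class ApiGateway:
    """A toy 'front door': authenticates, routes, and counts API calls."""

    def __init__(self, api_keys):
        self._api_keys = set(api_keys)
        self._routes = {}
        self.call_counts = Counter()

    def register(self, path, handler):
        """Expose a back-end service function under a public path."""
        self._routes[path] = handler

    def handle(self, path, api_key, payload=None):
        """Check the key, find the route, and forward the request."""
        if api_key not in self._api_keys:
            return {"status": 401, "body": "invalid API key"}
        handler = self._routes.get(path)
        if handler is None:
            return {"status": 404, "body": "unknown route"}
        self.call_counts[path] += 1  # only successful routings are counted
        return {"status": 200, "body": handler(payload)}


def inventory_service(payload):
    """Hypothetical back-end service hidden behind the gateway."""
    stock = {"WIDGET-7": 12}
    return {"sku": payload["sku"], "on_hand": stock.get(payload["sku"], 0)}


gateway = ApiGateway(api_keys={"demo-key"})
gateway.register("/inventory", inventory_service)
print(gateway.handle("/inventory", "demo-key", {"sku": "WIDGET-7"}))
print(gateway.handle("/inventory", "wrong-key", {"sku": "WIDGET-7"}))
```

Because every call passes through one place, security checks and call counting are implemented once rather than in each pair-wise link.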

4.3.2 Semantic Interoperability

In comparison to syntactic interoperability, semantic interoperability seeks to establish the meaning of the data items being shared. This is done by adding metadata (often manually) about the item via tagging using a predefined vocabulary of tags. The tags identify concepts. For example, HTML5 tags identify types of items on web pages.
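A minimal sketch of tagging against a predefined vocabulary follows. Two systems use different local field names, but once each field is tagged with a concept from the shared vocabulary, their records become directly comparable. The vocabulary entries and field names are hypothetical.

```python
# Hypothetical controlled vocabulary: tag -> definition of the concept.
VOCABULARY = {
    "customer_name": "Legal name of the purchasing party",
    "ship_date": "Date the goods left the warehouse (ISO 8601)",
    "unit_price_usd": "Price per unit in US dollars",
}


def tag_record(record, field_tags):
    """Re-key a record by vocabulary tag; reject unknown tags or untagged fields."""
    unknown = sorted(set(field_tags.values()) - set(VOCABULARY))
    if unknown:
        raise ValueError(f"tags not in vocabulary: {unknown}")
    untagged = sorted(set(record) - set(field_tags))
    if untagged:
        raise ValueError(f"fields without a tag: {untagged}")
    return {field_tags[field]: value for field, value in record.items()}


# System A and system B name the same concepts differently.
record_a = {"cust": "Acme", "shipped": "2024-05-01"}
tags_a = {"cust": "customer_name", "shipped": "ship_date"}

record_b = {"client_nm": "Acme", "dt_out": "2024-05-01"}
tags_b = {"client_nm": "customer_name", "dt_out": "ship_date"}

print(tag_record(record_a, tags_a) == tag_record(record_b, tags_b))
```

The mapping from local field names to tags is the metadata that usually has to be supplied by people who understand what each field means.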

For more than a dozen years, several efforts have sought to develop a common ontology of meaningful primitive concepts upon which more complex concepts could be built. The CEN/ISO EN13606 standard's Dual Model architecture creates a clear separation between what it calls "information" and "knowledge." Information is structured via a reference model for the types of entities used in the electronic health record (EHR). Knowledge is based on archetypes, such as discharge report, glucose measurement, or family history, that provide semantic meaning to a reference model structure. [4]

Many vertical enterprise areas such as telecommunications and health care, as well as talent management in ERP systems, have established common data models, or at least common definitions of data elements, at some level. IBM's Reference Semantic Model (RSM) provides a real-world abstraction of a generic enterprise and its assets in a graphical model based on, but expanding, ISO Standard 15926 for data modeling and interoperability using the Semantic Web.

The Open Group has produced a Universal Data Element Framework (UDEF) based on ISO/IEC 11179, an international data-management standard and consistent with the data-information-knowledge-wisdom (DIKW) hierarchy model, and related DIKW standards. UDEF is a framework for categorizing, naming, and indexing enterprise data elements and provides multi-language support for UDEF taxonomies.

The World Wide Web Consortium (W3C) produces standards to support the Semantic Web, promoting common data and exchange formats. W3C is responsible for the basis of the Semantic Web: RDF+OWL. The OWL group provides resources for Web Ontology Language (OWL) including classes, properties, individuals, and data values, and these are stored as Semantic Web documents. The Resource Description Framework (RDF) describes resources by their URIs, properties, and values, as shown in the example table below.

| Feature | URI | Property | Value |
|---|---|---|---|
| Explanation | What the resource is | A particular attribute of the resource | The value of the attribute |
| Example | www.mysite.com | Author of the site (resource) | myname |
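The URI, property, and value columns of the table correspond to the three parts of an RDF statement. The sketch below models such statements as plain Python tuples with a simple pattern-matching query. It is not an RDF library; the `Triple` type and `match` function are illustrative names, the `http://` prefix on the site address and the second resource are assumptions, and the property URIs are borrowed from the Dublin Core terms vocabulary.

```python
from typing import NamedTuple


class Triple(NamedTuple):
    subject: str    # URI of the resource
    predicate: str  # property, itself identified by a URI
    value: str      # value of the property


DC_CREATOR = "http://purl.org/dc/terms/creator"
DC_TITLE = "http://purl.org/dc/terms/title"

GRAPH = [
    Triple("http://www.mysite.com", DC_CREATOR, "myname"),
    Triple("http://www.mysite.com", DC_TITLE, "My Site"),
    Triple("http://www.example.org/other", DC_CREATOR, "someone-else"),
]


def match(graph, subject=None, predicate=None, value=None):
    """Return triples matching the pattern; None acts as a wildcard."""
    pattern = (subject, predicate, value)
    return [
        triple
        for triple in graph
        if all(want is None or want == got for want, got in zip(pattern, triple))
    ]


# Who is the author of www.mysite.com?
for triple in match(GRAPH, subject="http://www.mysite.com", predicate=DC_CREATOR):
    print(triple.value)
```

Because properties are identified by shared URIs rather than local column names, two parties that adopt the same vocabulary can interpret each other's statements without a bilateral agreement.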

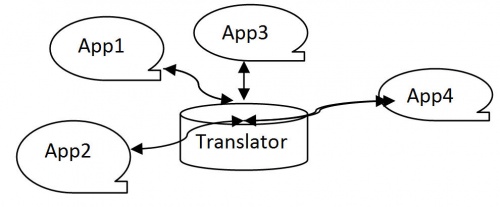

Before the advent of the Semantic Web, canonical data models were proposed to integrate separate applications and preserve the meaning of shared data. This approach requires the design of a canonical data model that is independent of the individual applications to be integrated, and serves as a translator between the apps. This additional level of indirection between the applications' individual data formats means that if a new application is added, only a transformation between the canonical data model and the new app's data has to be created, rather than a set of 1:1 transformations from one app to another.

Figure 5. API and Translator Interoperability
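The canonical data model approach can be sketched as follows: each application supplies one translator into the canonical form and one out of it, and any pair of applications then exchanges data through that shared form. The application names, field names, and record layouts are hypothetical.

```python
# Each application supplies a pair of adapters to and from the canonical form.
def crm_to_canonical(rec):
    return {"customer_id": rec["CustNo"], "name": rec["FullName"]}


def crm_from_canonical(canon):
    return {"CustNo": canon["customer_id"], "FullName": canon["name"]}


def billing_to_canonical(rec):
    return {"customer_id": rec["acct"], "name": f'{rec["first"]} {rec["last"]}'}


def billing_from_canonical(canon):
    first, _, last = canon["name"].partition(" ")
    return {"acct": canon["customer_id"], "first": first, "last": last}


ADAPTERS = {
    "crm": (crm_to_canonical, crm_from_canonical),
    "billing": (billing_to_canonical, billing_from_canonical),
}


def translate(record, source, target):
    """Translate via the canonical model instead of a direct 1:1 mapping."""
    to_canonical, _ = ADAPTERS[source]
    _, from_canonical = ADAPTERS[target]
    return from_canonical(to_canonical(record))


def pairwise_translators(n):
    """Directed 1:1 translators needed among n applications."""
    return n * (n - 1)


def canonical_translators(n):
    """Translators needed when every application maps to and from one model."""
    return 2 * n


print(translate({"CustNo": "C-42", "FullName": "Ada Lovelace"}, "crm", "billing"))
print(pairwise_translators(10), canonical_translators(10))
```

Adding an eleventh application under this scheme means writing two new adapters, whereas the pair-wise approach would require twenty new directed translators.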

4.3.3 Open Standards

Many international organizations, including the IEEE and the EC, are calling for the creation and use of open standards, as opposed to proprietary standards that lock users into specific vendors. "Open standards are standards made available to the general public and are developed (or approved) and maintained via a collaborative and consensus driven process. Open standards facilitate interoperability and data exchange among different products or services and are intended for widespread adoption." [5] The use of open standards can reduce the number of APIs required to establish interoperability across different platforms and applications. In addition, open standards for semantic interoperability, like those of the W3C, enable the exchange of semantically meaningful data among users of those standards, when they've established communication layers.

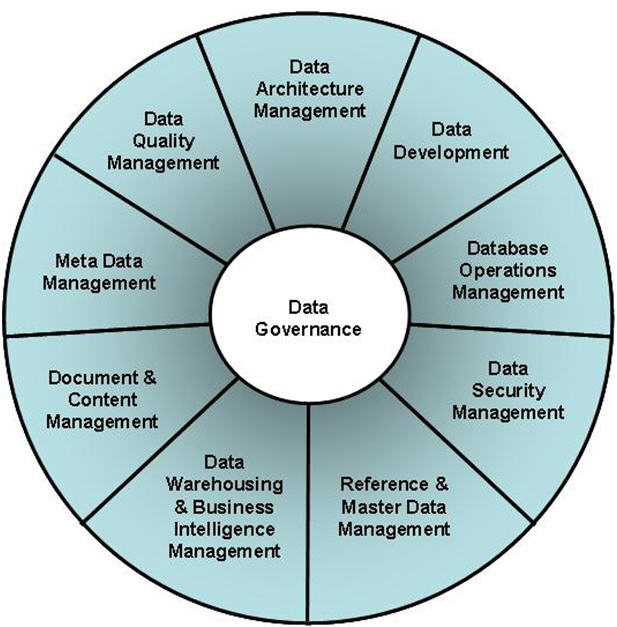

4.3.4 Data Governance

Given that interoperability is centered on the sharing of data, the discipline of data governance is essential to its successful implementation and use. The DAMA Dictionary of Data Management defines data governance as "The exercise of authority, control, and shared decision making (planning, monitoring, and enforcement) over the management of data assets." [6] Data governance is the core component of data management, tying together the other nine disciplines, as shown in the figure below.

Figure 6. Data Governance Model

As the end states of the technical and organizational aspects of an enterprise's interoperability framework are defined, it is essential that the enterprise determine how to govern data, where authority for decision making lies, how to ensure data integrity, and what monitoring and controls will be put into place.

For a full description of data management issues and controls, refer to the DAMA Dictionary of Data Management and the DAMA Guide to the Data Management Body of Knowledge. [7]

4.4 Interoperability Maturity Models

Governmental and intergovernmental efforts to achieve interoperability vary greatly in the scope of the organizational units served and in the techniques used to preserve the integrity of the meaning (semantics) of the data that is shared. Fortunately, some groups have been trying to provide an engineering analysis of the scope and integration level in various interoperability solutions. Such analyses have yielded some consistency in describing levels of maturity in implementations. Several models for describing levels of interoperability have been proposed. In some cases, they are used as indicators of the maturity level of the interoperability achieved. The governmental frameworks surveyed in Section 4.5 fall within the higher levels of maturity, as described below.

4.4.1 Levels of Conceptual Interoperability Model (LCIM)

The Levels of Conceptual Interoperability Model (LCIM) emerged from considerable research at the Virginia Modeling, Analysis and Simulation Center, which works with over a hundred industry, government, and academic members. The LCIM's seven levels of interoperability are listed in the table below.

| Level | Description |
|---|---|
| Level 0 | No interoperability |
| Level 1 – Technical | Communication protocol exists at the bits and bytes level |
| Level 2 – Syntactic | Uses a common structure, such as the data format |
| Level 3 – Semantic | Uses a common information exchange reference model, so that word meanings are the same in each system |
| Level 4 – Pragmatic | All interoperating systems are aware of each other's methods and procedures for using the data |
| Level 5 – Dynamic | State changes (including assumptions and constraints) in one system are comprehended by all interoperating systems |
| Level 6 – Conceptual | A shared meaningful abstraction of reality is achieved |

4.4.2 Levels of Information Systems Interoperability (LISI) Maturity Model

The Levels of Information Systems Interoperability (LISI) model originated in the need for military systems to interoperate. The U.S. Department of Defense's Command, Control, Communications, Computers, Intelligence, Surveillance, and Reconnaissance (C4ISR) Integration Task Force was initiated in 1993 to address the specific data-sharing requirements of the Command, Control, Communications, Computers, and Intelligence (C4I) domain. Like the LCIM, LISI defines levels of increasing sophistication in exchanging and sharing information.

| Level | Description |
|---|---|
| Level 0 – Isolated | Standalone systems with manual sharing (such as "sneaker net," paper copies, diskettes) |
| Level 1 – Connected | Homogeneous data exchanges via electronic connection (such as emails); separate data and applications |
| Level 2 – Distributed/functional | Separate data and applications; some common functions (such as HTTP); heterogeneous data, with common logical data models, or standard data structures dictated by the coordinating or receiving application |
| Level 3 – Integrated/domain | Shared data; separate applications (WANs); domain-based logical data models; shared or distributed data |
| Level 4 – Enterprise | Enterprise-wide shared data and applications; global information space across multiple domains |

In addition, LISI defines a common set of attributes by which sophistication is measured: PAID.

- P—Procedures for information management

- A—Applications acting on the data

- I—Infrastructure required

- D—Data to be transferred
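One way to work with the PAID attributes is to rate each attribute on the five LISI levels and derive an overall rating from them. The sketch below assumes the overall level is the lowest of the four attribute ratings, on the reasoning that a system is only as interoperable as its weakest attribute. That aggregation rule is an assumption made for illustration; this chapter does not state how LISI combines the ratings.

```python
from enum import IntEnum


class LisiLevel(IntEnum):
    ISOLATED = 0
    CONNECTED = 1
    DISTRIBUTED = 2  # "Distributed/functional" in the table above
    INTEGRATED = 3   # "Integrated/domain" in the table above
    ENTERPRISE = 4


def overall_level(paid):
    """Overall level as the minimum across P, A, I, D (assumed rule)."""
    missing = set("PAID") - set(paid)
    if missing:
        raise ValueError(f"missing PAID attributes: {sorted(missing)}")
    return LisiLevel(min(paid.values()))


def gaps(paid, target):
    """Attributes whose rating falls short of the target level."""
    return {attr: level for attr, level in paid.items() if level < target}


# Hypothetical assessment of one system.
assessment = {
    "P": LisiLevel.INTEGRATED,
    "A": LisiLevel.DISTRIBUTED,
    "I": LisiLevel.ENTERPRISE,
    "D": LisiLevel.CONNECTED,
}

print(overall_level(assessment).name)
print(sorted(gaps(assessment, LisiLevel.INTEGRATED)))
```

Listing the attributes that fall short of a target level gives a simple starting point for the roadmap toward the desired end state.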

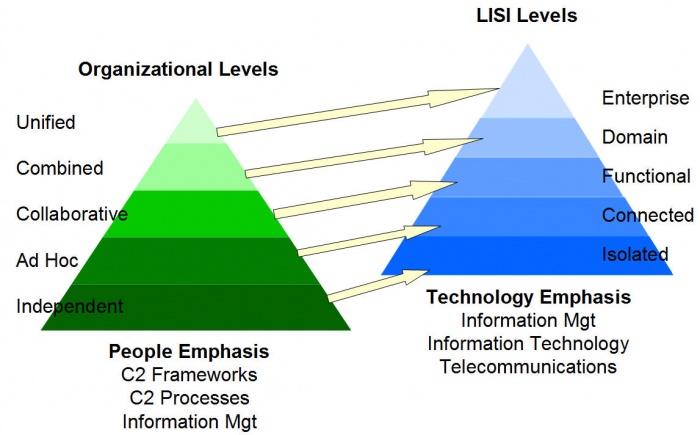

4.4.3 Organizational Interoperability Maturity Model (OIMM)

LISI was later extended to the Organizational Interoperability Maturity Model (OIMM) for Command and Control (C2). Complementary to LISI's technology orientation, the OIMM describes organizational interoperability maturity. While research in this area was focused on military needs, it is not difficult to imagine how the OIMM's levels could be applied within a supply chain business environment.

| Level | Description |
|---|---|
| Level 0 – Independent | Organizations that do not share common goals or purposes, but may be required to interact on rare occasions |
| Level 1 – Ad hoc | Organizations that have some overarching shared goals, but interaction is minimal; there are no formal mechanisms for interacting, and organizational aspirations take precedence over shared goals |
| Level 2 – Collaborative | Frameworks are in place to support interoperability and there are shared goals, but organizations are distinct |
| Level 3 – Combined | Organizations interoperate habitually with shared understanding, value systems, and goals, but there are still residual attachments to a home organization |
| Level 4 – Unified | The organization is interoperating continually with common value systems, goals, command structure/style, and knowledge |

Figure 7. Operational Levels vs. LISI Levels [8]

The OIMM is similar to the proposed Government Interoperability Model Matrix (GIMM), [9] which was designed for assessing an organization's current e-government interoperability capabilities. The GIMM also has five levels (Independent, Ad Hoc, Collaborative, Integrated, and Unified), resembling the OIMM, and is closely aligned with the Software Engineering Institute's Capability Maturity Model.

4.4.4 Information Systems Interoperability Maturity Model (ISIMM)

The Information Systems Interoperability Maturity Model (ISIMM) grew out of the Namibian government's need to achieve interoperability. It focuses on the technical interoperability of information systems by addressing four areas:

- Data interoperability

- Software interoperability

- Communication interoperability

- Physical interoperability

ISIMM has five levels, which are reminiscent of the GIMM levels.

| Level | Description |
|---|---|
| Level 1 – Manual | Unconnected |
| Level 2 – Ad Hoc | Point to point on an ad hoc basis |
| Level 3 – Collaborative | Basic collaboration between independent applications with shared logical data models but not shared data |
| Level 4 – Integrated | Data is shared and exchanged among applications based on domain-based data models |
| Level 5 – Unified | Data and applications are fully shared and distributed; data is commonly interpreted and based on a common exchange model |

4.5 Governmental Frameworks for Interoperability

Surveying available governmental frameworks is useful for understanding how interoperability on a large scale has been approached. The efforts mentioned below represent a wide range of approaches, all of which can contribute to interoperability at various levels. As might be expected, the greenfield approach is often taken in the most ambitious projects, thus avoiding the many challenges highlighted earlier. On the other hand, some of these efforts have taken into account the need to build on what already exists.

The European Commission is addressing both organizational and technology interoperability with the European Interoperability Framework (EIF). EIF was established to support the pan-European delivery of electronic government services. Its purview includes organizational, semantic, and technical interoperability (EIF, section 2.1.2).

Organizational interoperability in EIF targets the managers of e-government projects in member state administrations and EU bodies. The EU's initiative for interoperability for European public services provides resources for the development of a single digital semantic space, allowing information to be exchanged across European borders in support of a single European market. The EU has promoted the establishment of core concepts [10] and vocabularies and the Asset Description Metadata Schema (ADMS) as part of the semantic interoperability initiative.

The open data movement has led many governments to publish data they collect that might be of interest to the public and to link these data sets via standardized reference data. Many standard reference datasets are already available worldwide for sharing. An example is the set of North American Industry Classification System (NAICS) codes, which standardize statistical reporting across manufacturing, agriculture, mining, and other industries.

In the US, government-promoted interoperability is most evident in health informatics, particularly for electronic health records. Several private organizations are tackling important aspects of the issue. For example, errors can occur when a patient crosses a healthcare-setting boundary, such as being discharged from the hospital or moving to a different facility, if the appropriate medical information does not follow the patient across that boundary. Reliable interoperability allows information to flow freely among EMRs, imaging, cardiology, administrative, and other HIT systems.

The National Information Exchange Model (NIEM) is used across the US and for information sharing with Canada and Mexico. Similarly, the Australian Government Technical Interoperability Framework specifies a conceptual model and agreed technical standards that support collaboration between Australian government agencies.

NATO has long pursued interoperability of its member nations' communication and simulation systems. The SISO standards organization works closely with the IEEE Computer Society to define standards for simulation interoperability. NATO itself develops interoperability profiles [11] that identify essential profile elements including capability requirements and other architectural views, characteristic protocols, implementation options, technical standards, service interoperability points, and the relationship with other profiles such as the system profile to which an application belongs.

All of these governmental projects provide insights into how to design for interoperability.

5 Summary

Although interoperability has been recognized as a crucial need by the military and by governmental organizations worldwide for some time, it has only recently attained criticality in other enterprises. The desire for interoperability was initially driven by supply-chain management and now by big data and the IoT. Almost all models of interoperability for systems, whether of people and processes or of systems, have posited similar stages of maturity in interoperability. For the EIT organization moving upward in its interoperability maturity, it is essential to study what others have done, and then understand the challenges, analyze the enterprise's strategic needs, identify the desired end state, and map out the projects needed to achieve that end state.

6 Key Maturity Frameworks

Capability maturity for EIT refers to its ability to reliably perform. Maturity is measured by an organization's readiness and capability expressed through its people, processes, data, and technologies and the consistent measurement practices that are in place. See Appendix F for additional information about maturity frameworks.

Many specialized frameworks have been developed since the original Capability Maturity Model (CMM) that was developed by the Software Engineering Institute in the late 1980s. This section describes how some of those apply to the activities described in this chapter.

6.1 IT-Capability Maturity Framework (IT-CMF)

The IT-CMF was developed by the Innovation Value Institute in Ireland. This framework helps organizations to measure, develop, and monitor their EIT capability maturity progression. It consists of 35 EIT management capabilities that are organized into four macro capabilities:

- Managing EIT like a business

- Managing the EIT budget

- Managing the EIT capability

- Managing EIT for business value

Each has five different levels of maturity starting from initial to optimizing. The most relevant critical capability is enterprise architecture management (EAM).

6.1.1 Enterprise Architecture Management Maturity

The following statements provide a high-level overview of the enterprise architecture management (EAM) capability at successive levels of maturity.

| Level | Description |
|---|---|
| Level 1 | EA is conducted within the context of individual projects, by applying one-off principles and methods within those projects. |
| Level 2 | A limited number of basic architecture artifacts and practices are emerging in certain EIT domains or key projects. |
| Level 3 | A common suite of EA principles and methods is shared across the EIT function, allowing a unifying vision of EA to emerge. |
| Level 4 | Planning by the EIT function and the rest of the business consistently leverages enterprise-wide architecture principles and methods to enable efficiency and agility across the organization. |
| Level 5 | EA principles and methods are continually reviewed to maintain their ability to deliver business value. |

7 Key Competence Frameworks

While many large companies have defined their own sets of skills for purposes of talent management (to recruit, retain, and further develop the highest quality staff members that they can find, afford and hire), the advancement of EIT professionalism will require common definitions of EIT skills that can be used not just across enterprises, but also across countries. We have selected three major sources of skill definitions. While none of them is used universally, they provide a good cross-section of options.

Creating mappings between these frameworks and our chapters is challenging, because they come from different perspectives and have different goals. There is rarely a 100 percent correspondence between the frameworks and our chapters, and, despite careful consideration, some subjectivity was used to create the mappings. Please take that into consideration as you review them.

7.1 Skills Framework for the Information Age

The Skills Framework for the Information Age (SFIA) has defined nearly 100 skills. SFIA describes seven levels of competency that can be applied to each skill. However, not all skills cover all seven levels. Some reach only partway up the seven-step ladder. Others are based on mastering foundational skills, and start at the fourth or fifth level of competency. SFIA is used in nearly 200 countries, from Britain to South Africa, South America, the Pacific Rim, and the United States. (http://www.sfia-online.org)

The following SFIA skills correspond to activities in this chapter.

| Skill | Skill Description | Competency Levels |

|---|---|---|

| Solution architecture | The design and communication of high-level structures to enable and guide the design and development of integrated solutions that meet current and future business needs. In addition to technology components, solution architecture encompasses changes to service, process, organization, and operating models. Architecture definition must demonstrate how requirements (such as automation of business processes) are met, any requirements that are not fully met, and any options or considerations that require a business decision. The provision of comprehensive guidance on the development of, and modifications to, solution components to ensure that they take account of relevant architectures, strategies, policies, standards, and practices (including security) and that existing and planned solution components remain compatible. | 5-6 |

| Information assurance | The protection of integrity, availability, authenticity, non-repudiation, and confidentiality of information and data in storage and in transit. The management of risk in a pragmatic and cost-effective manner to ensure stakeholder confidence. | 5-7 |

| Data management | The management of practices and processes to ensure the security, integrity, safety, and availability of all forms of data and data structures that make up the organization's information. The management of data and information in all its forms and the analysis of information structure (including logical analysis of taxonomies, data, and metadata). The development of innovative ways of managing the information assets of the organization. | 2-6 |

| Emerging technology monitoring | The identification of new and emerging hardware, software and communication technologies and products, services, methods, and techniques and the assessment of their relevance and potential value as business enablers, improvements in cost/performance, or sustainability. The promotion of emerging technology awareness among staff and business management. | 5-6 |

| Enterprise and business architecture | The creation, iteration, and maintenance of structures such as enterprise and business architectures embodying the key principles, methods, and models that describe the organization's future state, and that enable its evolution. This typically involves the interpretation of business goals and drivers; the translation of business strategy and objectives into an "operating model"; the strategic assessment of current capabilities; the identification of required changes in capabilities; and the description of inter-relationships between people, organization, service, process, data, information, technology, and the external environment. The architecture development process supports the formation of the constraints, standards, and guiding principles necessary to define, ensure, and govern the required evolution; this facilitates change in the organization's structure, business processes, systems, and infrastructure in order to achieve predictable transition to the intended state. | 5-7 |

| Systems integration | The incremental and logical integration and testing of components/subsystems and their interfaces in order to create operational services. | 5-6 |

| EIT management | The management of the EIT infrastructure and resources required to plan for, develop, deliver, and support EIT services and products to meet the needs of a business. The preparation for new or changed services, management of the change process, and the maintenance of regulatory, legal, and professional standards. The management of performance of systems and services in terms of their contribution to business performance and their financial costs and sustainability. The management of bought-in services. The development of continual service improvement plans to ensure the EIT infrastructure adequately supports business needs. | 5-7 |

| Systems development management | The management of resources in order to plan, estimate, and carry out programs of solution development work to time, budget, and quality targets and in accordance with appropriate standards, methods, and procedures (including secure software development). The facilitation of improvements by changing approaches and working practices, typically using recognized models, best practices, standards, and methodologies. The provision of advice, assistance, and leadership in improving the quality of software development, by focusing on process definition, management, repeatability, and measurement. | 5-7 |

| Data analysis | The investigation, evaluation, interpretation, and classification of data, in order to define and clarify information structures that describe the relationships between real world entities. Such structures facilitate the development of software systems, links between systems, or retrieval activities. | 2-5 |
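Because each skill in the table above spans only part of the seven-level ladder, it can help to treat the competency levels as ranges. The following is a minimal sketch, not part of SFIA itself: the skill names and level ranges are copied from the table, while the names `SKILL_RANGES` and `skills_at_level` are hypothetical and chosen only for illustration.

```python
# Illustrative sketch only: SKILL_RANGES and skills_at_level are
# hypothetical names, not part of any SFIA tooling.
SFIA_LEVELS = range(1, 8)  # SFIA describes seven levels of competency

# (lowest level, highest level) per skill, as listed in the table above
SKILL_RANGES = {
    "Solution architecture": (5, 6),
    "Information assurance": (5, 7),
    "Data management": (2, 6),
    "Emerging technology monitoring": (5, 6),
    "Enterprise and business architecture": (5, 7),
    "Systems integration": (5, 6),
    "EIT management": (5, 7),
    "Systems development management": (5, 7),
    "Data analysis": (2, 5),
}


def skills_at_level(level: int) -> list[str]:
    """Return the skills from the table that are defined at the given level."""
    if level not in SFIA_LEVELS:
        raise ValueError(f"SFIA levels run from 1 to 7, got {level}")
    return [
        name
        for name, (low, high) in SKILL_RANGES.items()
        if low <= level <= high
    ]
```

For example, `skills_at_level(2)` returns only the two skills whose ranges start at level 2, which reflects the point that not every skill covers every level.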

7.2 European Competency Framework

The European Union's European e-Competence Framework (e-CF) has 40 competences and is used by a large number of companies, qualification providers, and others in public and private sectors across the EU. It uses five levels of competence proficiency (e-1 to e-5). No competence is subject to all five levels.

The e-CF is published and legally owned by CEN, the European Committee for Standardization, and its National Member Bodies (www.cen.eu). Its creation and maintenance have been co-financed and politically supported by the European Commission, in particular, DG (Directorate General) Enterprise and Industry, with contributions from the EU ICT multi-stakeholder community, to support competitiveness, innovation, and job creation in European industry. The Commission works on a number of initiatives to boost ICT skills in the workforce. Versions 1.0 to 3.0 were published as CEN Workshop Agreements (CWA). The e-CF 3.0 CWA 16234-1 was published as an official European Norm (EN), EN 16234-1. For complete information, see http://www.ecompetences.eu.

| e-CF Dimension 2 | e-CF Dimension 3 |

|---|---|

| A.5. Architectural Design (PLAN) Specifies, refines, updates, and makes available a formal approach to implement solutions necessary to develop and operate the IS architecture. Identifies change requirements and the components involved: hardware, software, applications, processes, information, and technology platform. Takes into account interoperability, scalability, usability, and security. Maintains alignment between business evolution and technology developments. | Level 3-5 |

| B.2. Component Integration (BUILD) Integrates hardware, software, or subsystem components into an existing or new system. Complies with established processes and procedures, such as configuration management and package maintenance. Takes into account the compatibility of existing and new modules to ensure system integrity, system interoperability, and information security. Verifies and tests system capacity and performance and documentation of successful integration. | Level 2-4 |

7.3 i Competency Dictionary

The Information Technology Promotion Agency (IPA) of Japan has developed the i Competency Dictionary (iCD), translated it into English, and describes it at https://www.ipa.go.jp/english/humandev/icd.html. The iCD is an extensive skills and tasks database, used in Japan and Southeast Asian countries. It establishes a taxonomy of tasks and the skills required to perform the tasks. The IPA is also responsible for the Information Technology Engineers Examination (ITEE), which has grown into one of the largest-scale national examinations in Japan, with approximately 600,000 applicants each year.

The iCD consists of a Task Dictionary and a Skill Dictionary. Skills for a specific task are identified via a "Task x Skill" table. (See Appendix A for the task layer and skill layer structures.) EITBOK activities in each chapter require several tasks in the Task Dictionary.

The table below shows a sample task from iCD Task Dictionary Layer 2 (with Layer 1 in parentheses) that corresponds to activities in this chapter. It also shows the Layer 2 (Skill Classification), Layer 3 (Skill Item), and Layer 4 (knowledge item from the IPA Body of Knowledge) prerequisite skills associated with the sample task, as identified by the Task x Skill Table of the iCD Skill Dictionary. The complete iCD Task Dictionary (Layer 1-4) and Skill Dictionary (Layer 1-4) can be obtained by returning the request form provided at http://www.ipa.go.jp/english/humandev/icd.html.

| Task Dictionary | Skill Dictionary | | |

|---|---|---|---|

| Task Layer 1 (Task Layer 2) | Skill Classification | Skill Item | Associated Knowledge Items |

| Systems architecture design (system requirements definition and architecture design) | System architecting technology | Systems Interoperability | |
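The relationship between the two dictionaries can be pictured as a lookup from a task to its prerequisite skills. The following is a minimal sketch under stated assumptions: it holds only the single sample row from the table above, and the names `SkillEntry`, `TASK_X_SKILL`, and `prerequisite_skills` are hypothetical and do not reflect the iCD's actual data format.

```python
from dataclasses import dataclass


# Illustrative sketch only: these names are hypothetical and are not
# taken from the iCD or any IPA-provided tooling.
@dataclass(frozen=True)
class SkillEntry:
    classification: str                     # Skill Dictionary Layer 2
    item: str                               # Skill Dictionary Layer 3
    knowledge_items: tuple[str, ...] = ()   # Layer 4; none listed in the sample


# A "Task x Skill" table reduced to the one sample row shown above,
# keyed by the task name as it appears in that row.
TASK_X_SKILL: dict[str, list[SkillEntry]] = {
    "Systems architecture design": [
        SkillEntry(
            classification="System architecting technology",
            item="Systems Interoperability",
        ),
    ],
}


def prerequisite_skills(task: str) -> list[SkillEntry]:
    """Return the skills mapped to a task, or an empty list if unmapped."""
    return TASK_X_SKILL.get(task, [])
```

Keying the table by task keeps the Task Dictionary and the Skill Dictionary separate, so either can grow without changing the other. The complete dictionaries would need to be obtained from the IPA as described above.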

8 Key Roles

The following roles are common to IT service management (ITSM):

- Applications Analyst

- Compliance Manager

- Demand Manager

- Enterprise Architect

- Information Security Manager

- Service Owner

- Technical Analyst

Other key roles include:

- Data Architect

- Development Manager

- Operations Manager

- Solution Architect

9 Standards

ISO 18435-1:2009 Industrial automation systems and integration—Diagnostics, capability assessment and maintenance applications integration—Part 1: Overview and general requirements

ISO/TR 18161:2013 Automation systems and integration—Applications integration approach using information exchange requirements modeling and software capability profiling

ISO 18435-3 Industrial automation systems and integration—Diagnostics, capability assessment and maintenance applications integration—Part 3: Applications integration description method

ISO 19439:2006 Enterprise integration—Framework for enterprise modeling

ISO 15926:2009 Industrial automation systems and integration—Integration of life-cycle data for process plants including oil and gas production facilities

ISO/IEC 11179-1:2004 Framework (referred to as ISO/IEC 11179-1)

ISO/IEC 11179-2:2005 Classification

ISO/IEC 11179-3:2013 Registry metamodel and basic attributes

ISO/IEC 11179-4:2004 Formulation of data definitions

ISO/IEC 11179-5:2005 Naming and identification principles

ISO/IEC 11179-6:2005 Registration

ISO/IEC 10746-3:2009, Information technology—Open distributed processing—Reference model: Architecture

ISO/IEC TS 24748-6, Systems and software engineering—Lifecycle management—Part 6: Guide to system integration engineering

ISO/IEC/IEEE 26531:2015, Systems and software engineering—Content management for product life-cycle, user, and service management documentation

NOTE: Almost all EIT product and communication standards are concerned with interoperability. Among the most widely used is IEEE STD 802, IEEE Standard for Local and Metropolitan Area Networks (over 160 active parts).

ANSI/ISA-95.00.01-2000, Enterprise-Control System Integration

10 References

[1] ISO/IEC 25010:2011 Systems and software engineering—Systems and software Quality Requirements and Evaluation (SQuaRE)—System and software quality models, 4.2.3.2.

[2] Hervé Panetto, Arturo Molina. Enterprise integration and interoperability in manufacturing systems: Trends and issues. Computers in Industry, Elsevier, Volume 59, Issue 7, September 2008, Pages 641–646, http://www.sciencedirect.com/science/article/pii/S0166361508000353

[3] Julie Craig, Research Director, Application Management Enterprise Management Associates, Inc. www.enterprisemanagement.com

[4] http://www.en13606.org/the-ceniso-en13606-standard

[5] http://www.itu.int/en/ITU-T/ipr/Pages/open.aspx

[6] The DAMA Dictionary of Data Management, DAMA International, 2011.

[7] The DAMA Guide to the Data Management Body of Knowledge (DAMA-DMBOK), DAMA International, Technics Publications, April 1, 2009, ISBN-13: 978-1-935504-00-9.

[8] "Next Generation Data Interoperability: It's all About the Metadata," Leslie S. Winters, Michael M. Gorman, Dr. Andreas Tolk, Fall Simulation Interoperability Workshop, Orlando, FL, September 2006.

[9] Towards Standardizing Interoperability Levels for Information Systems of Public Administration, Sarantis, S.; Charalibidis, Y.; Psarras, J. Electronic Journal for E-Commerce Tools and Applications, May 2008.

[10] A core concept is a simplified data model that captures the minimal, global characteristics/attributes of an entity in a generic, country and domain neutral fashion. It can be represented as core vocabulary using different formalisms (e.g., XML, RDF, JSON). See https://joinup.ec.europa.eu/community/semic/description.

[11] 1) NATO Architecture Framework Version 3. NATO C3 Agency. Copyright © 2007.

2) Information technology—Framework and taxonomy of International Standardized Profiles—Part 3: Principles and Taxonomy for Open System Environment Profiles. Copyright © 1998. ISO. ISO/IEC TR 10000-3.

3) NATO Interoperability Standards and Profiles Allied Data Publication 34, (ADatP-34(H)), Volume 3.

11 Related and Informing Disciplines

- Cloud computing

- Data science

- Enterprise architecture

- Network design

- Supply-chain management