Difference between revisions of "Quality"



<h2>Introduction</h2>

<p>Quality is a universal concept. Whether we are conscious of it or not, quality affects the creation, production, and consumption of all products and services. In this chapter, we look at quality in an [http://eitbokwiki.org/Glossary#eit enterprise information technology (EIT)] context, including what quality is and what that means for an EIT environment (rather than at ''[http://eitbokwiki.org/Glossary#qms quality management systems]''). [[#One|[1]]] Our focus is defining quality itself in such a way that its practical application is easily understood.</p>

<p>Quality is a critical concept that spans the software and systems development lifecycle. It does not simply live within the quality department, nor is it simply a stage that focuses on delivered products. Rather, it is a set of concepts and ideas that ensure that the product, service, or system being delivered creates the greatest sustainable value when taking into account the various needs of all the [http://eitbokwiki.org/Glossary#stakeholder stakeholders] involved. Quality is about more than just how ''good'' a product is.</p>

<p>For consumers of EIT services and products in the enterprise, including those in-stream services and products that contribute to the delivery or creation of other EIT services and products, the idea of quality is inextricably bound with expectations (whether formal, informal, or non-formal). As more high-tech capabilities have become available to consumers at large, expectations for [http://eitbokwiki.org/Glossary#eit EIT] have risen considerably. For this reason, it is crucial that EIT works with its enterprise consumers to understand expectations (and for consumers to understand what can reasonably be delivered).</p>

<h2>Goals and Guiding Principles</h2>

<p>The first goal of any organization is to clarify, understand, and then satisfy the stakeholders' expectations of the products and services they receive. To do this, it is necessary to enable the organization to make ''information-based trade-offs'' when budgets, schedules, technical [http://eitbokwiki.org/Glossary#constraint constraints], or other exigencies do not allow all expectations to be fully met.</p>

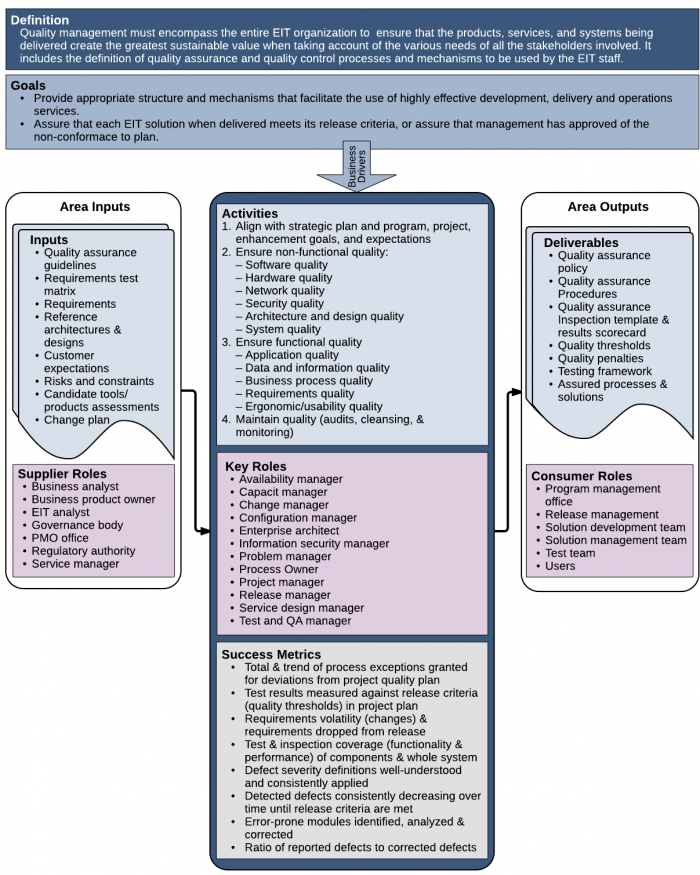

<p>The quality function in the EIT organization includes far more than just testing new or changed services. Its overall responsibility is to provide appropriate structure (usually processes) and mechanisms for EIT personnel that lead to highly effective development, delivery, and operations services. Its specific responsibility for each project that will deliver new or changed services is to ensure that the service's intended users agree with the project's release criteria and that products are not released unless those criteria are met.</p>

<p>ISO/IEC/IEEE describes quality as the "ability of a product, service, system, component, or process to meet customer or user needs, expectations, or requirements." [[#Two|[2]]] This statement incorporates a number of important principles:</p>

<ul>

<li>Quality is not about what we ''do''; it is about what the customers/users/stakeholders ''get''. [[#Three|[3]]]</li>

<li>Quality is not absolute, but is always relative to the particular context.</li>

<li>Quality, as an ''ability'' (or a set of "-ilities"), is ''observable'', and so is something we can measure and evaluate.</li>

<li>Quality is about what the users (or stakeholders) ''expect'', as much as what they might formally ''require''.</li>

</ul>

<p>This last point is echoed by Peter Drucker, a respected writer on business and entrepreneurism, who wrote, "'Quality' in a product or service is not what the supplier puts in. It is what the customer gets out and is willing to pay for. A product is not 'quality' because it is hard to make and costs a lot of money, as manufacturers typically believe. That is incompetence. Customers pay only for what is of use to them and gives them value. Nothing else constitutes 'quality'." [[#Four|[4]]]</p>

<h2>Context Diagram</h2>

<p>[[File:06 Quality CD.png|700px]]<br />'''Figure 1. Context Diagram for Quality'''</p>

<h2>Key Quality Concepts</h2>

<h3>Measuring Quality</h3>

<p>Quality is measurable. Quality in a product or service comprises a set of measurable attributes, such as height, weight, color, reliability, speed, and perceived "friendliness." Attributes are selected for a given product's quality set based on the end users' requirements. The quality models described below provide common sets of attributes for EIT products and services.</p>

<p>Measuring quality attributes as the product is developed and tested tells us whether progress toward the release criteria is being made, and whether quality is increasing or decreasing. We can also use the measurement information to make the kinds of information-based trade-offs that organizations often need to make. Measuring quality also supports the application of accepted management practices to the goal of achieving, improving, and increasing quality.</p>

<p>An important principle from management theory is "what you measure is what you get." It follows that product quality should be measured. ''Measurement of product quality'' is focused on knowing whether, and to what degree, expectations are satisfied or requirements are met. An example from agile would be to know whether a component meets its "definition of done." [[#Five|[5]]]</p>

<h3>Requirements Management</h3>

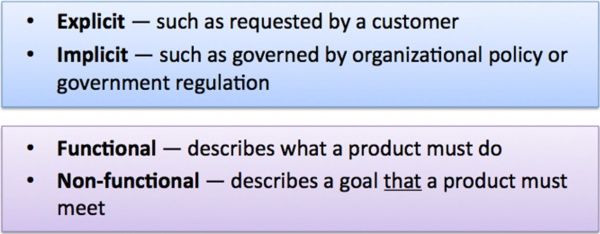

<p>Measuring product quality requires that the organization be capable of good ''requirements management''. Requirements management comprises the set of processes that an organization uses to gather, assess, evaluate, quantify, document, prioritize, communicate, and, ultimately, deliver the requirements of its stakeholders. Requirements might be of the types illustrated in the graphic below.</p>

<p>[[File:RequirementTypeOptions.jpg|600px]]<br />'''Figure 2. Requirement Type Options'''</p>

<p>Key goals of requirements management are to ensure that requirements are unambiguously stated, are universally agreed upon, and are understood in terms of their value to stakeholders.</p>

<p>Because requirements are fundamental to the delivery of a quality product, ''quality models'' (see [[#QualityModels|Quality Models]]) are generally used as an aid to ensure that requirements statements are complete and comprehensive.</p>

<h3>Quality Capability</h3>

<p>''Quality capability'' is the capacity and ability of the organization's processes, procedures, methods, controls, and governance to deliver quality products.</p>

<p>In order to be confident about satisfying stakeholder requirements, the organization must also ''measure'' its quality capability, and, therefore, measure the quality of its processes, procedures, methods, controls, standards, and so on. The established way to create quality capability is by first introducing ''standard practices'' so that all participants in the process know what to expect, and to ensure that these practices are well understood and comprehensively adopted.</p>

<p>An organization's quality capability often reflects its maturity. In a mature EIT organization, its members have a well-understood concept of their common processes and share a common vocabulary for talking about those processes. Various organizations have published versions of EIT maturity models, but no single model has been generally adopted. In addition to making it easier for people to get their jobs done, standard practices also make it possible to methodically collect and implement ''lessons learned'' at specific points in a development project or at regular times during EIT production operations. This reflects the principle of ''continuous improvement''.</p>

<h3>QMS and QC vs. QA</h3>

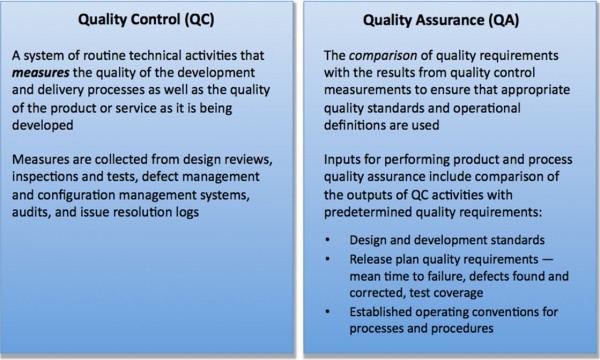

<p>The fundamental approach to achieving quality products and services is to develop processes and mechanisms for [http://eitbokwiki.org/Glossary#qc quality control (QC)] and for [http://eitbokwiki.org/Glossary#qa quality assurance (QA)]. These are normally expressed as an organization's ''[http://eitbokwiki.org/Glossary#qms quality management system (QMS)]'', which is the collection of an organization's business processes, quality policies, and quality objectives directed toward meeting customer requirements.</p>

<p>Although the terms QC and QA are often used interchangeably, there is a very meaningful difference between them as shown below.</p>

<p>[[File:QA_QC.jpg|600px]]<br />'''Figure 3. QC vs. QA'''</p>

<p>A common misconception is that QA and ''testing'' are equivalent. In fact, testing is one of the tools of QC, because the aim of testing is the detection and quantification of errors, which then results in defect reports. By assessing information from defect reports (as part of QA), EIT can determine whether it is meeting its quality objectives.</p>

<h3>Technical Debt and the Cost of Rework</h3>

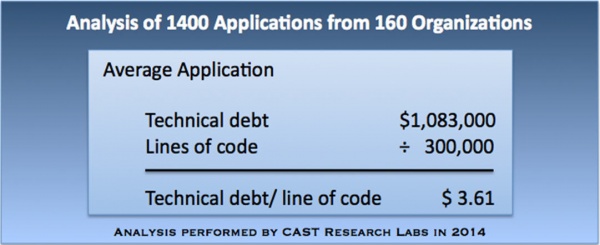

<p>For most organizations, software production is based on a balance, or set of compromises, among requirements, budget provided, and time available. Whenever a decision is made to limit (or omit or defer) engineering work due to budgetary or time [http://eitbokwiki.org/Glossary#constraint constraints], the resulting loss in quality (quantified as the amount of rework that would need to be done later to rectify the compromise) is commonly referred to as ''technical debt''. [[#Six|[6]]] In essence, technical debt accrues when a "pay now or pay later" situation results in a "pay later" decision. Typical causes of technical debt include:</p>

<ul>

<li>The tendency to decide consciously ''not'' to fix known minor bugs prior to delivery because of time (or other) pressures</li>

<li>A lack of knowledge about how much, and what kinds of, testing should be done prior to releasing a system into operational status, resulting in errors not being found until the system goes into production</li>

</ul>

<p>Technical debt is referred to as a ''debt'' because the applied design or construction approach (often called a "kludge"), while expedient in the short term, creates a technical context in which the same work will likely have to be redone in the future, incurring a future cost (or debt). The total cost of such rework is typically greater than the cost of doing the work now (including increased cost over time), and escalates as technical debt accumulates.</p>

<p>The cost of rework is usually seen in EIT as fire-fighting. Hidden bugs from poorly designed or built code appear suddenly, usually at the worst possible time, precipitating an urgent fix. People must drop everything to get things running normally again.</p>

<p>The total cost in such situations includes both the work done to fix the bug and the work that had to be dropped so that the bug could be fixed. Technical debt, however, refers only to how much it would cost to fix the poor-quality issue at hand; it does not include the lost opportunity inherent in new work that had to be put off.</p>

<p>While estimates vary, some studies suggest that maintenance activities (most of which are rework of some kind) can account for up to 80 percent of an EIT budget. An inevitable result of this kind of cost burden is that fewer resources are left available to fulfill requests to create new capabilities in support of new business opportunities.</p>

<p>Several studies have attempted to quantify the economic impact of technical debt. Based on "the analysis of 1400 applications containing 550 million lines of code submitted by 160 organizations, CRL estimates that the technical debt of an average-sized application of 300,000 lines of code (LOC) is $1,083,000. This represents ''an average technical debt per LOC of $3.61''." [[#Seven|[7]]] CRL considered only those problems that are "highly likely to cause severe business disruption," not all problems. According to Gartner research (as reported by Deloitte University Press, http://dupress.com/articles/2014-tech-trends-technical-debt-reversal/), "… global EIT debt [in 2014] is estimated to stand at $500 billion, with the potential to rise to $1 trillion in 2015."</p>

<p>[[File:ApplicationAnalysis.jpg|600px]]<br />'''Figure 4. Application Analysis'''</p>
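<p>The per-line figure quoted above can be checked with simple arithmetic: $3.61 multiplied by 300,000 LOC gives $1,083,000. The Python sketch below reproduces that calculation. The function name <code>estimate_technical_debt</code> and its parameters are illustrative assumptions, not part of the cited study, and extrapolating linearly to other application sizes is a simplification made only for illustration.</p>

```python
from decimal import Decimal

# Average technical debt per line of code, as quoted in the text above.
DEBT_PER_LOC = Decimal("3.61")


def estimate_technical_debt(lines_of_code: int,
                            debt_per_loc: Decimal = DEBT_PER_LOC) -> Decimal:
    """Return a rough technical-debt estimate in dollars.

    Hypothetical helper: it assumes debt scales linearly with size,
    which is a simplification and not a claim made by the study.
    """
    if lines_of_code < 0:
        raise ValueError("lines_of_code must be non-negative")
    # Decimal arithmetic avoids binary floating-point rounding on currency.
    return debt_per_loc * lines_of_code


if __name__ == "__main__":
    # The average-sized application from the quoted study: 300,000 LOC.
    print(estimate_technical_debt(300_000))
```

<p>Using <code>Decimal</code> rather than <code>float</code> keeps the currency arithmetic exact, so the result matches the quoted total to the cent.</p>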


<h2>Product and Service Requirements</h2>

<p>Everyone understands that EIT must work to provide specified ''functional'' requirements, but few understand non-functional quality requirements. While functional requirements describe the functions (often called ''features'') that a product should have (the things it should do), non-functional requirements describe requisite qualities (attributes) of the [http://eitbokwiki.org/Glossary#solution solution] that are necessary to deliver the functionality. [[#Eight|[8]]] Requirements may also be implicit, rather than explicit; for example, a feature may require interfacing with another application in order to deliver the information requested by the user, but the user may not know what other systems the application needs to talk to in order to get the information. Non-functional requirements are also used to judge the operation of the system, and as such are an important part of the requirements gathering and analysis process.</p>

<p>To ensure that all requirements are appropriately considered in product implementation, it is essential that appropriate quality measures are also selected in discussion with the consumer of the product. This facilitates an objective evaluation of product quality. Determining the most appropriate measures requires defining the attributes that are most important. For example, a software product used for creating calendar entries or mailing lists may not need the same degree of timeliness, accuracy, and precision as software used in safety-critical applications. Requirements for high levels of certain quality ''attributes'' are more prevalent in critical enterprise systems that have impact on productivity, communication, and revenues, and in the protection of personal, financial, or business proprietary information.</p>

<p>Quality requirements may be specified in [http://eitbokwiki.org/Glossary#sla service-level agreements (SLAs)] or in release criteria for introducing new capabilities. The [[#QualityModels|Quality Models]] section describes commonly accepted quality models, which can be used to help identify the required quality attributes (the quality requirements) to be specified in SLAs and release criteria.</p>

<h2>Planning for Quality</h2>

<p>Quality rarely happens by accident; if it does, it is even more rarely repeatable. Organizations that consistently achieve high quality do so by preparing the groundwork through appropriate planning. Quality planning is the process of "identifying which quality standards are relevant to the project and determining how to satisfy them." Quality planning means planning how to fulfill process and product (deliverable) quality requirements.</p>

<p>Creating a quality plan for a product or project is related to, but different from, the task of creating a project plan. A quality plan takes these factors into account:</p>

<ul>

<li>The stakeholders' requirements must be fully understood and prioritized.</li>

<li>The organization's appetite for accruing technical debt should be evaluated.</li>

<li>The attributes that allow stakeholders to decide that they have received quality must be identified and agreed upon.</li>

<li>Measurements to demonstrate that the required attributes are present must be identified and established.</li>

<li>"Lessons learned" from past projects must be applied to the overall project plan.</li>

</ul>

<h3>Inputs to Quality Planning</h3>

<p>Inputs include management information such as planned milestones or checkpoints in the development or acquisition plan, and the plans of other groups that will participate in the delivery. To be effective, quality planning means knowing when the earliest possible opportunities for meaningful measurement will occur. The earlier quality control activities can measure artifacts, the earlier quality assurance can start to compare the results to requirements. The earlier issues are detected, the earlier corrective action can be applied, which almost always means less rework downstream, at smaller cost, and with less technical debt.</p>

<p>QA and QC should be planned to occur as part of requirements specification, architecture and data design, and system development, as well as through configuration management, formal testing, and release into production, regardless of the chosen development approach (e.g., so-called "waterfall" vs. Agile [[#Nine|[9]]], and so on).</p>

<p>Four of the most important inputs to quality planning are service-level agreements (SLAs), customer relationship management (CRM) programs, release criteria, and acceptance criteria. ''[http://eitbokwiki.org/Glossary#sla SLAs]'' are one of the most common methods used for setting expectations, especially for delivery of EIT services. They are [http://eitbokwiki.org/Glossary#contract contracts] between service providers (e.g., software developers) and service consumers (e.g., customers), and typically exist as agreements between business managers and EIT management for the creation and delivery of software (or other products and services). They specify levels of service quality that business managers require. They are especially important where external vendors are involved. Typically, SLAs address such service-related items as business value expectations, security, performance (with metrics), incident management, and dispute resolution.</p>

<p>The establishment of ''[http://eitbokwiki.org/Glossary#crm customer relationship management (CRM)]'' programs is becoming more common in EIT organizations. The purpose of CRM programs is to consistently monitor performance to SLAs, to provide a framework for ongoing assessment of user satisfaction, and to establish effective ongoing communication with customers.</p>

<p>Similar to SLAs, release criteria are also contracts between those who produce products and services and those who consume them. Again, ''release criteria'' are agreements between business managers in the enterprise and EIT management, and are also critically important to contracts with third-party vendors when acquiring a product or component. When initiating projects to deliver new products or services, the expectations (that is, the quality requirements) should be spelled out in the release criteria, so that they can be measured at each delivery point. In addition to requiring that all performance, functional, and similar requirements are verified [[#Ten|[10]]] to have been met and validated [[#Eleven|[11]]] to work as the user expects, a simple common release criterion is the stipulation that all highest-importance defects (e.g., "Severity 1" and "Severity 2") must be corrected.</p>
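<p>The simple release criterion described above, that no "Severity 1" or "Severity 2" defect may remain uncorrected, can be expressed as an automated check. The following Python sketch is one possible formulation under stated assumptions: the <code>Defect</code> record, its field names, the numeric severity scale (1 is most severe), and the sample identifiers are all hypothetical and would need to be mapped to an organization's own defect-tracking data.</p>

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass(frozen=True)
class Defect:
    """Hypothetical defect record; field names are assumptions."""
    identifier: str
    severity: int   # assumed scale: 1 is the most severe
    resolved: bool


def blocking_defects(defects: Iterable[Defect],
                     max_blocking_severity: int = 2) -> List[Defect]:
    """Return unresolved defects whose severity blocks a release."""
    return [d for d in defects
            if not d.resolved and d.severity <= max_blocking_severity]


def meets_release_criterion(defects: Iterable[Defect],
                            max_blocking_severity: int = 2) -> bool:
    """True when no unresolved Severity 1 or Severity 2 defect remains."""
    return not blocking_defects(defects, max_blocking_severity)


if __name__ == "__main__":
    # Illustrative data only.
    backlog = [
        Defect("D-101", severity=1, resolved=True),
        Defect("D-102", severity=2, resolved=False),
        Defect("D-103", severity=3, resolved=False),
    ]
    print(meets_release_criterion(backlog))
    print([d.identifier for d in blocking_defects(backlog)])
```

<p>Returning the list of blocking defects, rather than only a pass/fail flag, gives the measurement information needed to discuss trade-offs at each delivery point.</p>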

<p>Essential to each of these approaches is agreeing on what to measure, that is, identifying the key attributes of a service or product that should be measured in order to monitor its quality. In some EIT organizations, the project team may depend on the receiving organization to establish ''acceptance criteria''. In those cases, quality planning should embed those criteria in the quality plan, and adjust them as necessary to attain requisite measures.</p>

<h4>The Quality Plan</h4> | <h4>The Quality Plan</h4> | ||

| − | <p>The quality plan, part of the overall project plan (though it may be a physically separate document), is the output from quality planning. While covering all | + | <p>The quality plan, part of the overall project plan (though it may be a physically separate document), is the output from quality planning. While covering all the areas presented above, at a minimum, it describes these criteria:</p> |

<ul> | <ul> | ||

<li>Product/service quality attributes linked to the release criteria or service levels specified in an SLA</li> | <li>Product/service quality attributes linked to the release criteria or service levels specified in an SLA</li> | ||

<li>Measurements for each attribute, with range of [http://eitbokwiki.org/Glossary#acceptable acceptable] values</li> | <li>Measurements for each attribute, with range of [http://eitbokwiki.org/Glossary#acceptable acceptable] values</li> | ||

<li>How and when measurements are collected and stored</li> | <li>How and when measurements are collected and stored</li> | ||

| − | <li>Location of all relevant documents, such as test plans, checklists, management plans | + | <li>Location of all relevant documents, such as test plans, checklists, and management plans</li> |

<li>Specific process improvements to be implemented</li> | <li>Specific process improvements to be implemented</li> | ||

<li>Resources responsible for the above</li> | <li>Resources responsible for the above</li> | ||

</ul> | </ul> | ||
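<p>The first two items in the list above (quality attributes, and measurements with a range of acceptable values) lend themselves to a simple data structure. The following is a minimal sketch under stated assumptions: the <code>Measure</code> class, its fields, and the sample attribute names and ranges are hypothetical and serve only to show how observed values might be compared against the plan.</p>

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    """Hypothetical quality-plan entry: one attribute and its acceptable range."""
    attribute: str
    unit: str
    low: float
    high: float

    def is_acceptable(self, value: float) -> bool:
        # The range is treated as inclusive at both ends (an assumption).
        return self.low <= value <= self.high


def out_of_range(plan, observed):
    """Return attributes whose observed value is missing or outside its range."""
    failures = []
    for measure in plan:
        value = observed.get(measure.attribute)
        if value is None or not measure.is_acceptable(value):
            failures.append(measure.attribute)
    return failures
```

<p>For example, a plan holding <code>Measure("availability", "percent", 99.5, 100.0)</code> would flag an observed availability of 99.0 and would also flag the attribute if no measurement had been collected at all.</p>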

<div id="QualityModels"></div><h2>Quality Models</h2> | <div id="QualityModels"></div><h2>Quality Models</h2> | ||

| − | <p>Because quality is typically thought of very subjectively (and thus as very different from other factors affecting software delivery, such as resources | + | <p>Because quality is typically thought of very subjectively (and thus as very different from other factors affecting software delivery, such as resources or time), practical need saw the development of various quality models by many large high-tech companies. In the US, the national drive to higher quality was captured in the US government's Baldrige National Quality Program and its Award in 1987. We list a few of the most common quality models below. (They are contained in ISO/IEC 25010:2011.)</p> |

| − | <p> | + | <p>IBM's [http://eitbokwiki.org/Glossary#cuprmd CUPRMD] (pronounced "cooperMD") specified capability, usability, performance, reliability, maintainability, and documentation as key quality vectors for product quality. This was a good start, as it provided a framework of measurable product characteristics for assessing when a product was good enough to ship.</p> |

| − | <p>Under Bob | + | <p>Under Bob Grady's [[#Twelve|[12]]] impetus, HP developed [http://eitbokwiki.org/Glossary#flurps FLURPS] (functionality, localizability, usability, reliability, performance, supportability) and its successor, FLURPS+, which enabled different parts of the company to emphasize particular qualities on top of the six FLURPS categories.</p> |

| − | <p>In the UK, ICL introduced the Strategic Quality Model as part of its quality improvement process (developed with Philip Crosby). The SQM was | + | <p>In the UK, ICL introduced the Strategic Quality Model (SQM) as part of its quality improvement process (developed with Philip Crosby). The SQM was ICL's implementation of the European Quality Award model (created by the European Foundation for Quality Management).</p> |

<p>Standardization efforts for software product metrics began in the 1990s. After several rounds of evolution, FRUEMPS (ISO/IEC/IEEE Std 25010) is the current product quality model. Its perspectives can be applied to hardware and software systems as well as to software products. ISO/IEC/IEEE Std 25010 also presents a model for quality in use, while ISO/IEC 25012 provides a complementary data-quality model.</p> | <p>Standardization efforts for software product metrics began in the 1990s. After several rounds of evolution, FRUEMPS (ISO/IEC/IEEE Std 25010) is the current product quality model. Its perspectives can be applied to hardware and software systems as well as to software products. ISO/IEC/IEEE Std 25010 also presents a model for quality in use, while ISO/IEC 25012 provides a complementary data-quality model.</p> | ||

<h3>The Product Quality Model</h3> | <h3>The Product Quality Model</h3> | ||

| − | <p>The Product Quality | + | <p>The Product Quality model (ISO/IEC 25010:2011) focuses on internal and external perspectives of a product (or other organizational output, such as a service or system). This model can be used by those specifying or evaluating software and systems, and can be applied from the perspective of their [http://eitbokwiki.org/Glossary#acquisition acquisition], requirements, development, use, evaluation, support, maintenance, quality assurance and control, and audit. The model can also be a very useful checklist when determining the adequacy of a set of requirements.</p> |

<p>The quality ''attributes'' or characteristics of the product that can be assessed or measured, commonly abbreviated as ''FRUEMPS'', are shown in the graphic below.</p> | <p>The quality ''attributes'' or characteristics of the product that can be assessed or measured, commonly abbreviated as ''FRUEMPS'', are shown in the graphic below.</p> | ||

| − | [[File:QualityAttributes.jpg|600px]] | + | <p>[[File:QualityAttributes.jpg|600px]]<br />'''Figure 5. Quality Attributes'''</p> |

| − | < | + | |

<h3>The Quality in Use Model</h3> | <h3>The Quality in Use Model</h3> | ||

| − | <p>The Quality in Use | + | <p>The Quality in Use model (ISO/IEC 25010:2011) takes a different perspective. Rather than focusing on the product, it focuses on the ''effect'' of the product in a given context of use and the experience of using it. It stipulates the following characteristics [[#Thirteen|[13]]] for measuring the quality of a product in use:</p> |

<ol type="I"> | <ol type="I"> | ||

| − | <li>'''Effectiveness''' | + | <li>'''Effectiveness'''—The product does what the user needs (what the user wants it to do)</li> |

| − | <li>'''Efficiency''' | + | <li>'''Efficiency'''—The product does not impede the task at hand.</li> |

| − | <li>'''Satisfaction''' | + | <li>'''Satisfaction'''—The user appreciates the product's usefulness, trusts it, and finds pleasure and comfort in using it.</li> |

| − | <li>'''Freedom from [http://eitbokwiki.org/Glossary#risk risk]''' | + | <li>'''Freedom from [http://eitbokwiki.org/Glossary#risk risk]'''—The product mitigates economic risk, health and safety risk, and environmental risk.</li> |

| − | <li>'''Context coverage''' | + | <li>'''Context coverage'''—The context of the product is complete.</li> |

</ol> | </ol> | ||

<h3>The Data Quality Model</h3> | <h3>The Data Quality Model</h3> | ||

| − | <p>A | + | <p>A user's experience of quality depends on how efficiently and effectively a software product works. In most cases, the correct working of a product depends on data. Therefore, it is important, from a software development perspective, to pay attention to the quality of that data. The Data Quality model (ISO/IEC 25012:2008) applies to structured data acquired, manipulated, or used by a computer system to satisfy users' needs, including data that can be shared within the same computer system or by different computer systems. Data quality concerns itself with such characteristics as accuracy, completeness, consistency, credibility, currency, and precision, as well as various data usage characteristics such as accessibility, availability, efficiency, and understandability. </p> |

<h2>Summary</h2> | <h2>Summary</h2> | ||

| − | <p>Quality is an important concept in software and service development. It is defined as the degree to which stakeholder requirements and expectations have been satisfied. While | + | <p>Quality is an important concept in software and service development. It is defined as the degree to which stakeholder requirements and expectations have been satisfied. While quality is primarily concerned with requirements satisfaction, it also has some impact on how software products and services are created: creating a quality product is dependent on the quality of underlying tools, methods, processes, and resources. A mature ''quality capability'' underpins the delivery of quality products and services.</p> |

| − | <p>Quality, as defined, is measurable. Achieving quality, then, is strongly supported through the use of a robust ''quality plan'', which specifies which ''quality controls'' (measures) | + | <p>Quality, as defined, is measurable. Achieving quality, then, is strongly supported through the use of a robust ''quality plan'', which specifies which ''quality controls'' (measures) are used by ''quality assurance'' to guide product development toward maximizing ''quality'' (and avoiding technical debt).</p> |

| − | <p>It is important to understand that quality is not the act of doing ''quality management'', ''quality control'', or ''quality assurance'', though these are important tools. Rather, quality is something that is perceived by the recipient of the product or service. Because of this, effective requirements management | + | <p>It is important to understand that quality is not the act of doing ''quality management'', ''quality control'', or ''quality assurance'', though these are important tools. Rather, quality is something that is perceived by the recipient of the product or service. Because of this, effective requirements management with clear acceptance criteria is critical to success.</p> |

<p>Finally, making appropriate use of the various ''quality models'' is a great way of ensuring that a quality plan has taken account of all essential factors.</p> | <p>Finally, making appropriate use of the various ''quality models'' is a great way of ensuring that a quality plan has taken account of all essential factors.</p> | ||

| − | <h2> | + | <h2> Key Maturity Frameworks</h2> |

| − | <p> | + | <p>Capability maturity for EIT refers to its ability to reliably perform. Maturity is measured by an organization's readiness and capability expressed through its people, processes, data, and technologies and the consistent measurement practices that are in place. See [http://eitbokwiki.org/Enterprise_IT_Maturity_Assessments Appendix F] for additional information about maturity frameworks.</p> |

| − | < | + | <p>Many specialized frameworks have been developed since the original Capability Maturity Model (CMM) that was developed by the Software Engineering Institute in the late 1980s. This section describes how some of those apply to the activities described in this chapter. </p> |

| − | <p> | + | <h3>IT-Capability Maturity Framework (IT-CMF) </h3> |

| − | <p> | + | <p>The IT-CMF was developed by the Innovation Value Institute in Ireland. This framework helps organizations to measure, develop, and monitor their EIT capability maturity progression. It consists of 35 EIT management capabilities that are organized into four macro capabilities: </p> |

| + | <ul> | ||

| + | <li>Managing EIT like a business</li> | ||

| + | <li>Managing the EIT budget</li> | ||

| + | <li>Managing the EIT capability</li> | ||

| + | <li>Managing EIT for business value</li> | ||

| + | </ul> | ||

| + | <p>The two most relevant critical capabilities are organization design and planning (ODP) and IT leadership and governance (ITG). </p> | ||

| + | <h4>Organization Design and Planning Maturity</h4> | ||

| + | <p>The following statements provide a high-level overview of the organization design and planning (ODP) capability at successive levels of maturity.</p> | ||

| + | <table> | ||

| + | <tr valign="top"> | ||

| + | <td width="10%">Level 1</td> | ||

| + | <td>The organization structure of the EIT function is not well defined or is defined in an ad hoc manner. Internal structures are unclear, as are the interfaces between the EIT function and other business units, suppliers, and business partners. </td> | ||

| + | </tr> | ||

| + | <tr valign="top"> | ||

| + | <td>Level 2</td> | ||

| + | <td>A basic organization structure is defined for the EIT function, focused primarily on internal roles, responsibilities, and accountabilities. A limited number of key interfaces between EIT and other business units, suppliers, and business partners are designed. </td> | ||

| + | </tr> | ||

| + | <tr valign="top"> | ||

| + | <td>Level 3</td> | ||

| + | <td>The EIT function's organization structure is harmonized across internal functions, with a primary focus on enabling the mission and values of the business. Most interfaces between EIT and other business units, suppliers, and business partners are designed. </td> | ||

| + | </tr> | ||

| + | <tr valign="top"> | ||

| + | <td>Level 4</td> | ||

| + | <td>The EIT function is able to adopt a range of organization structures simultaneously, from industrialized EIT to innovation centers, in support of the organization's strategic direction. All interfaces to entities external to the EIT function are designed. </td> | ||

| + | </tr> | ||

| + | <tr valign="top"> | ||

| + | <td>Level 5</td> | ||

| + | <td>The organization structures adopted by the EIT function are continually reviewed, taking into account insights from industry benchmarks. All interfaces are continually reviewed and adapted in response to changes in the business environment. </td> | ||

| + | </tr> | ||

| + | </table> | ||

| + | <h4>IT Leadership and Governance Maturity</h4> | ||

| + | <p>The following statements provide a high-level overview of the IT leadership and governance (ITG) capability at successive levels of maturity.</p> | ||

| + | <table> | ||

| + | <tr valign="top"> | ||

| + | <td width="10%">Level 1</td> | ||

| + | <td>IT leadership and governance are non-existent or are carried out in an ad hoc manner. </td></tr> | ||

| + | <tr valign="top"><td>Level 2</td><td>Leadership with respect to a unifying purpose and direction for EIT is beginning to emerge. Some decision rules and governance bodies are in place, but these are typically not applied or considered in a consistent manner.</td></tr> | ||

| + | <tr valign="top"><td>Level 3</td><td>Leadership instills commitment to a common purpose and direction for EIT across the EIT function and some other business units. EIT decision-making forums collectively oversee key EIT decisions and monitor performance of the EIT function. </td></tr> | ||

| + | <tr valign="top"><td>Level 4</td><td>Leadership instills commitment to a common purpose and direction for EIT across the organization. Both the EIT function and other business units are held accountable for the outcomes from EIT. </td></tr> | ||

| + | <tr valign="top"><td>Level 5</td><td>EIT governance is fully integrated into the corporate governance model, and governance approaches are continually reviewed for improvement, regularly including insights from relevant business ecosystem partners.</td></tr> | ||

| + | </table> | ||

| + | <h2> Key Competence Frameworks</h2> | ||

| + | <p>While many large companies have defined their own sets of skills for purposes of talent management (to recruit, retain, and further develop the highest quality staff members that they can find, afford, and hire), the advancement of EIT professionalism will require common definitions of EIT skills that can be used not just across enterprises, but also across countries. We have selected three major sources of skill definitions. While none of them is used universally, they provide a good cross-section of options. </p> | ||

| + | <p>Creating mappings between these frameworks and our chapters is challenging, because they come from different perspectives and have different goals. There is rarely a 100 percent correspondence between the frameworks and our chapters, and, despite careful consideration, some subjectivity was used to create the mappings. Please take that into consideration as you review them.</p> | ||

| + | <h3>Skills Framework for the Information Age</h3> | ||

| + | <p>The Skills Framework for the Information Age (SFIA) has defined nearly 100 skills. SFIA describes seven levels of competency that can be applied to each skill. However, not all skills cover all seven levels. Some reach only partially up the seven-step ladder. Others are based on mastering foundational skills, and start at the fourth or fifth level of competency. SFIA is used in nearly 200 countries, from Britain to South Africa, South America, to the Pacific Rim, to the United States. (http://www.sfia-online.org)</p> | ||

| + | <table cellpadding="5" border="1"> | ||

| + | <tr valign="top"><th style="background-color: #58ACFA;"><font color="white">Skill</font></th><th style="background-color: #58ACFA;"><font color="white">Skill Description</font></th><th width="10%" style="background-color: #58ACFA;"><font color="white">Competency Levels</font></th></tr> | ||

| + | <tr valign="top"><td>Quality management</td><td>The application of techniques for the monitoring and improving of quality for any aspect of a function or process. The achievement of, maintenance of, and compliance to national and international standards, as appropriate, and to internal policies, including those relating to sustainability and security.</td><td valign="top">4-7</td></tr> | ||

| + | <tr valign="top"><td>Quality assurance</td><td>The process of ensuring that the agreed quality standards within an organization are adhered to and that best practice is promulgated throughout the organization.</td><td valign="top">3-6</td></tr> | ||

| + | <tr valign="top"><td>Quality standards</td><td>The development, maintenance, control, and distribution of quality standards.</td><td valign="top">2-5</td></tr> | ||

| + | <tr valign="top"><td>Testing</td><td>The planning, design, management, execution, and reporting of tests, using appropriate testing tools and techniques and conforming to agreed process standards and industry-specific regulations. The purpose of testing is to ensure that new and amended systems, configurations, packages, or services, together with any interfaces, perform as specified (including security requirements), and that the risks associated with deployment are adequately understood and documented. Testing includes the process of engineering, using and maintaining testware (test cases, test scripts, test reports, test plans, etc.) to measure and improve the quality of the software being tested.</td><td valign="top">1-6</td></tr> | ||

| + | <tr valign="top"><td>Methods and tools</td><td>Ensuring that appropriate methods and tools for the planning, development, testing, operation, management, and maintenance of systems are adopted and used effectively throughout the organization.</td><td valign="top">4-6</td></tr> | ||

| + | <tr valign="top"><td>Business process testing</td><td>The planning, design, management, execution, and reporting of business process tests and usability evaluations. The application of evaluation skills to the assessment of the ergonomics, usability, and fitness for purpose of defined processes. This includes the synthesis of test tasks to be performed (from statement of user needs and user interface specification), the design of an evaluation program, the selection of user samples, the analysis of performance, and inputting results to the development team.</td><td valign="top">4-6</td></tr> | ||

| + | <tr valign="top"><td>Systems integration</td><td>The incremental and logical integration and testing of components/subsystems and their interfaces in order to create operational services.</td><td valign="top">2-6</td></tr> | ||

| + | <tr valign="top"><td>Conformance review</td><td>The independent assessment of the conformity of any activity, process, deliverable, product, or service to the criteria of specified standards, best practice, or other documented requirements. May relate to, for example, asset management, network security tools, firewalls and internet security, sustainability, real-time systems, application design, and specific certifications.</td><td valign="top">3-6</td></tr> | ||

| + | <tr valign="top"><td>User experience evaluation</td><td>Evaluation of systems, products, or services to ensure that the stakeholder and organizational requirements have been met, required practice has been followed, and systems in use continue to meet organizational and user needs. Iterative assessment (from early prototypes to final live implementation) of effectiveness, efficiency, user satisfaction, health and safety, and accessibility to measure or improve the usability of new or existing processes, with the intention of achieving optimum levels of product or service usability.</td><td valign="top">2-6</td></tr> | ||

| + | </table> | ||

| + | <h3>European Competency Framework</h3> | ||

| + | <p>The European Union's European e-Competence Framework (e-CF) has 40 competences and is used by a large number of companies, qualification providers, and others in public and private sectors across the EU. It uses five levels of competence proficiency (e-1 to e-5). No competence is subject to all five levels.</p> | ||

| + | <p>The e-CF is published and legally owned by CEN, the European Committee for Standardization, and its National Member Bodies (www.cen.eu). Its creation and maintenance have been co-financed and politically supported by the European Commission, in particular, DG (Directorate General) Enterprise and Industry, with contributions from the EU ICT multi-stakeholder community, to support competitiveness, innovation, and job creation in European industry. The Commission works on a number of initiatives to boost ICT skills in the workforce. Versions 1.0 to 3.0 were published as CEN Workshop Agreements (CWA). The e-CF 3.0 CWA 16234-1 was published as an official European Norm (EN), EN 16234-1. For complete information, see http://www.ecompetences.eu. </p> | ||

| + | <table cellpadding="5" border="1"> | ||

| + | <tr valign="top"><th width="85%" style="background-color: #58ACFA;"><font color="white">e-CF Dimension 2</font></th><th width="15%" style="background-color: #58ACFA;"><font color="white">e-CF Dimension 3</font></th></tr> | ||

| + | <tr valign="top"><td><strong>D.2. ICT Quality Strategy Development (Enable)</strong><br />Defines, improves, and refines a formal strategy to satisfy customer expectations and improve business performance (balance between cost and risks). Identifies critical processes influencing service delivery and product performance for definition in the ICT quality management system. Uses defined standards to formulate objectives for service management and product and process quality. Identifies ICT quality management accountability.</td><td valign="top">Level 4-5</td></tr> | ||

| + | <tr valign="top"><td><strong>E.5. Process Improvement (MANAGE)</strong><br />Measures effectiveness of existing ICT processes. Researches and benchmarks ICT process design from a variety of sources. Follows a systematic methodology to evaluate, design, and implement process or technology changes for measurable business benefit. Assesses potential adverse consequences of process change.</td><td valign="top">Level 3-4</td></tr> | ||

| + | <tr valign="top"><td><strong>E.6. ICT Quality Management (MANAGE)</strong><br />Implements ICT quality policy to maintain and enhance service and product provision. Plans and defines indicators to manage quality with respect to ICT strategy. Reviews quality measures and recommends enhancements to influence continuous quality improvement.</td><td valign="top">Level 2-4</td></tr> | ||

| + | </table> | ||

| + | <h3>i Competency Dictionary </h3> | ||

| + | <p>The Information Technology Promotion Agency (IPA) of Japan has developed the i Competency Dictionary (iCD), translated it into English, and describes it at https://www.ipa.go.jp/english/humandev/icd.html. The iCD is an extensive skills and tasks database, used in Japan and Southeast Asian countries. It establishes a taxonomy of tasks and the skills required to perform the tasks. The IPA is also responsible for the Information Technology Engineers Examination (ITEE), which has grown into one of the largest-scale national examinations in Japan, with approximately 600,000 applicants each year.</p> | ||

| + | <p>The iCD consists of a Task Dictionary and a Skill Dictionary. Skills for a specific task are identified via a "Task x Skill" table. (See [http://eitbokwiki.org/Glossary Appendix A] for the task layer and skill layer structures.) EITBOK activities in each chapter require several tasks in the Task Dictionary. </p> | ||

| + | <p>The table below shows a sample task from iCD Task Dictionary Layer 2 (with Layer 1 in parentheses) that corresponds to activities in this chapter. It also shows the Layer 2 (Skill Classification), Layer 3 (Skill Item), and Layer 4 (knowledge item from the IPA Body of Knowledge) prerequisite skills associated with the sample task, as identified by the Task x Skill Table of the iCD Skill Dictionary. The complete iCD Task Dictionary (Layer 1-4) and Skill Dictionary (Layer 1-4) can be obtained by returning the request form provided at http://www.ipa.go.jp/english/humandev/icd.html. </p> | ||

| + | <table cellpadding="5" border="1"> | ||

| + | <tr valign="top"><th width="15%" style="background-color: #58ACFA;" font-size="14pt"><font color="white">Task Dictionary</font></th><th colspan="3" style="background-color: #58ACFA;" font-size="14pt"><font color="white">Skill Dictionary</font></th></tr> | ||

| + | <tr valign="top"><th width="30%" style="background-color: #58ACFA;"><font color="white">Task Layer 1 (Task Layer 2)</font></th><th width="15%" style="background-color: #58ACFA;"><font color="white">Skill Classification</font></th><th width="15%" style="background-color: #58ACFA;"><font color="white">Skill Item</font></th><th width="40%" style="background-color: #58ACFA;"><font color="white">Associated Knowledge Items</font></th></tr> | ||

| + | <tr valign="top"><td><em><strong>Quality management control <br />(quality management)</strong></em></td><td valign="top">Quality management methods</td><td valign="top">Quality assurance methods</td><td> <ul> | ||

| + | <li>Independent form of IV&V organization</li> | ||

| + | <li>Independent V&V (IV&V)</li> | ||

| + | <li>V&V plan</li> | ||

| + | <li>V&V (IEEE 610.12)</li> | ||

| + | <li>Stress test </li> | ||

| + | <li>Usability </li> | ||

| + | <li>Techniques </li> | ||

| + | <li>Verification (IEEE 610.12, 1012)</li> | ||

| + | <li>Verification (ISO 9000)</li> | ||

| + | <li>Acceptance/contract conformity</li> | ||

| + | <li>Performance </li> | ||

| + | <li>Validation (IEEE 610.12, 1012)</li> | ||

| + | <li>Validation (ISO 9000)</li> | ||

| + | <li>Standard specification </li> | ||

| + | <li>Quality management </li> | ||

| + | <li>Concept of quality assurance </li> | ||

| + | </ul> | ||

| + | </td> | ||

| + | </tr> | ||

| + | </table> | ||

| + | <h2>Key Roles</h2> | ||

| + | <p>The following are typical ITSM roles:</p> | ||

| + | <ul> | ||

| + | <li>Availability Manager</li> | ||

| + | <li>Capacity Manager</li> | ||

| + | <li>Change Manager</li> | ||

| + | <li>Configuration Manager</li> | ||

| + | <li>Enterprise Architect</li> | ||

| + | <li>Information Security Manager</li> | ||

| + | <li>Problem Manager</li> | ||

| + | <li>Process Owner</li> | ||

| + | <li>Project Manager</li> | ||

| + | <li>Release Manager</li> | ||

| + | <li>Service Design Manager</li> | ||

| + | <li>Test and QA Manager</li> | ||

| + | </ul> | ||

<h2>Standards</h2> | <h2>Standards</h2> | ||

| − | <p> | + | <p>IEEE Std 730™-2014, IEEE Standard for Software Quality Assurance Processes</p> |

| − | <p> | + | <p>ISO 9000:2015, Quality management systems—Fundamentals and vocabulary</p> |

| + | <p>ISO 9001:2015, Quality management systems—Requirements </p> | ||

| + | <p>In addition, the SQuaRE series (ISO/IEC 250NN) includes over 30 standards on Systems and software Quality Requirements and Evaluation (SQuaRE), such as:</p> | ||

| + | <p>ISO/IEC 25001:2014 Systems and software engineering—Systems and software Quality Requirements and Evaluation (SQuaRE)—Planning and management </p> | ||

| + | <p>ISO/IEC 25010:2011, Systems and software engineering—Systems and software Quality Requirements and Evaluation (SQuaRE)—System and software quality models </p> | ||

| + | <p>ISO/IEC 25012:2008, Software engineering—Software product Quality Requirements and Evaluation (SQuaRE)—Data quality model </p> | ||

| + | <p>ISO/IEC 25030:2007, Software engineering—Software product Quality Requirements and Evaluation (SQuaRE)—Quality requirements </p> | ||

<h2>References</h2> | <h2>References</h2> | ||

| − | <div id="One"></div><p>[1] For a detailed explanation of the [http://eitbokwiki.org/Glossary#qms quality management system (QMS)], there is the [http://eitbokwiki.org/Glossary#iso ISO] 9000 family of standards. ISO 9000:2005 Quality management | + | <div id="One"></div><p>[1] For a detailed explanation of the [http://eitbokwiki.org/Glossary#qms quality management system (QMS)], there is the [http://eitbokwiki.org/Glossary#iso ISO] 9000 family of standards. ISO 9000:2005 Quality management systems—Fundamentals and vocabulary provides a good introduction, and ISO 9001:2008 Quality management systems—Requirements (approximately 30 pages) describes the requirements for a formal quality management system. If it is important for your organization to be certified as ISO 9000-compliant, you want to learn about those standards. </p> |

<div id="Two"></div><p>[2] SEVOCAB (http://pascal.computer.org/sev_display/index.action), which is formalized as ISO/IEC/IEEE 24765.</p> | <div id="Two"></div><p>[2] SEVOCAB (http://pascal.computer.org/sev_display/index.action), which is formalized as ISO/IEC/IEEE 24765.</p> | ||

<div id="Three"></div><p>[3] What we do is the subject of quality management systems and their associated fields of quality control and quality assurance.</p> | <div id="Three"></div><p>[3] What we do is the subject of quality management systems and their associated fields of quality control and quality assurance.</p> | ||

<div id="Four"></div><p>[4] Drucker, P. F. (1986). ''Innovation and Entrepreneurship: Practice and Principles''. New York: Harper & Row, p. 228.</p> | <div id="Four"></div><p>[4] Drucker, P. F. (1986). ''Innovation and Entrepreneurship: Practice and Principles''. New York: Harper & Row, p. 228.</p> | ||

| − | <div id="Five"></div><p>[5] And knowing, of course, how complete is the | + | <div id="Five"></div><p>[5] And knowing, of course, how complete is the "definition of done" itself.</p> |

<div id="Six"></div><p>[6] Cunningham first coined the term ''technical debt'' in 1992 as a software design metaphor that highlights the underlying costs associated with the compromise between the short-term gain of getting software out the door and getting the release done on time versus the long-term viability of the software system. http://www.ontechnicaldebt.com/blog/ward-cunningham-capers-jones-a-discussion-on-technical-debt/ (accessed 8/26/2014).</p> | <div id="Six"></div><p>[6] Cunningham first coined the term ''technical debt'' in 1992 as a software design metaphor that highlights the underlying costs associated with the compromise between the short-term gain of getting software out the door and getting the release done on time versus the long-term viability of the software system. http://www.ontechnicaldebt.com/blog/ward-cunningham-capers-jones-a-discussion-on-technical-debt/ (accessed 8/26/2014).</p> | ||

<div id="Seven"></div><p>[7] http://www.castsoftware.com/research-labs/technical-debt-estimation (accessed 8/26/2014).</p>

<div id="Eight"></div><p>[8] For a good list of non-functional requirements, check ISO/IEC/IEEE Std. 29148.</p>

<div id="Nine"></div><p>[9] Completion of QA and QC items should be an essential component of the definition of done.</p>

<div id="Ten"></div><p>[10] Verification is the "process of providing objective evidence that the system, software, or hardware and its associated products conform to requirements (e.g., for correctness, completeness, consistency, and accuracy) for all lifecycle activities during each lifecycle process (acquisition, supply, development, operation, and maintenance), satisfy standards, practices, and conventions during lifecycle processes, and successfully complete each lifecycle activity and satisfy all the criteria for initiating succeeding lifecycle activities" (IEEE 1012-2012, IEEE Standard for System and Software Verification and Validation).</p>

<div id="Eleven"></div><p>[11] Validation is the "process of providing evidence that the system, software, or hardware and its associated products satisfy requirements allocated to it at the end of each lifecycle activity, solve the right problem (e.g., correctly model physical laws, implement business rules, and use the proper system assumptions), and satisfy intended use and user needs" (IEEE 1012-2012, IEEE Standard for System and Software Verification and Validation).</p>

<div id="Twelve"></div><p>[12] Robert B. Grady, ''Practical Software Metrics for Project Management and Process Improvement'', Prentice Hall, 1992 (ISBN 0-13-720384-5).</p>

<div id="Thirteen"></div><p>[13] Although flexibility is not included in this standard, it is often requested by stakeholders.</p>

Latest revision as of 01:20, 23 December 2017


1 Introduction

Quality is a universal concept. Whether we are conscious of it or not, quality affects the creation, production, and consumption of all products and services. In this chapter, we look at quality in an enterprise information technology (EIT) context, focusing on what quality is and what that means for an EIT environment rather than on quality management systems. [1] Our focus is defining quality itself in such a way that its practical application is easily understood.

Quality is a critical concept that spans the software and systems development lifecycle. It does not simply live within the quality department, nor is it simply a stage that focuses on delivered products. Rather, it is a set of concepts and ideas that ensure that the product, service, or system being delivered creates the greatest sustainable value when taking account of the various needs of all the stakeholders involved. Quality is about more than just how good a product is.

For consumers of EIT services and products in the enterprise, including those in-stream services and products that contribute to the delivery or creation of other EIT services and products, the idea of quality is inextricably bound with expectations (whether formal, informal, or non-formal). As more high-tech capabilities have become available to consumers at large, expectations for EIT have risen considerably. For this reason, it is crucial that EIT work with its enterprise consumers to understand their expectations (and that consumers understand what can reasonably be delivered).

2 Goals and Guiding Principles

The first goal of any organization is to clarify, understand, and then satisfy the stakeholders' expectations of the products and services they receive. To do this, it is necessary to enable the organization to make information-based trade-offs when budgets, schedules, technical constraints, or other exigencies do not allow all expectations to be fully met.

The quality function in the EIT organization includes far more than just testing new or changed services. Its overall responsibility is to provide appropriate structure (usually processes) and mechanisms for EIT personnel that lead to highly effective development, delivery, and operations services. Its specific responsibility for each project that will deliver new or changed services is to ensure that the service's intended users agree with the project's release criteria and that products are not released unless those criteria are met.

ISO/IEC/IEEE 24765 describes quality as the "ability of a product, service, system, component, or process to meet customer or user needs, expectations, or requirements." [2] This statement incorporates a number of important principles:

- Quality is not about what we do—it is about what the customers/users/stakeholders get. [3]

- Quality is not absolute, but is always relative to the particular context.

- Quality, as an ability (or a set of "-ilities"), is observable, and so is something we can measure and evaluate.

- Quality is about what the users (or stakeholders) expect, as much as what they might formally require.

This last point is echoed by Peter Drucker, a respected writer on business and entrepreneurism, who wrote, "'Quality' in a product or service is not what the supplier puts in. It is what the customer gets out and is willing to pay for. A product is not 'quality' because it is hard to make and costs a lot of money, as manufacturers typically believe. That is incompetence. Customers pay only for what is of use to them and gives them value. Nothing else constitutes 'quality'." [4]

3 Context Diagram

Figure 1. Context Diagram for Quality

4 Key Quality Concepts

4.1 Measuring Quality

Quality is measurable. Quality in a product or service comprises a set of measurable attributes, such as height, weight, color, reliability, speed, and perceived "friendliness." Attributes are selected for a given product's quality set based on the end users' requirements. The quality models described below provide common sets of attributes for EIT products and services.

Measuring quality attributes as the product is developed and tested tells us whether progress toward the release criteria is being made and whether quality is increasing or decreasing. We can also use the measurement information to make the kinds of information-based trade-offs that organizations often need to make. Measuring quality also supports the application of accepted management practices to the goal of achieving, improving, and increasing quality.

An important principle from management theory is "what you measure is what you get." Thus, product quality should be measured. Measurement of product quality is focused on knowing whether, and to what degree, expectations are satisfied or requirements are met. An example from agile would be to know whether a component meets its "definition of done." [5]
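
As a minimal sketch of how measured attributes might be compared against release criteria, the following Python example checks a set of measurements against thresholds. The attribute names, the threshold values, and the `check_release_criteria` function are all hypothetical and chosen only for illustration; they do not come from any standard or from the quality models discussed in this chapter.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Criterion:
    """One release criterion: a measurable attribute and its acceptable limit."""
    attribute: str
    limit: float
    higher_is_better: bool

    def is_met(self, measured: float) -> bool:
        if self.higher_is_better:
            return measured >= self.limit
        return measured <= self.limit


def check_release_criteria(criteria, measurements):
    """Return the names of attributes whose criteria are not met.

    An attribute with no measurement counts as unmet, because an
    unmeasured expectation cannot be shown to be satisfied.
    """
    unmet = []
    for criterion in criteria:
        measured = measurements.get(criterion.attribute)
        if measured is None or not criterion.is_met(measured):
            unmet.append(criterion.attribute)
    return unmet


# Hypothetical criteria and measurements, for illustration only.
criteria = [
    Criterion("availability_percent", 99.5, higher_is_better=True),
    Criterion("median_response_ms", 300.0, higher_is_better=False),
    Criterion("open_severe_defects", 0.0, higher_is_better=False),
]
measurements = {
    "availability_percent": 99.7,
    "median_response_ms": 340.0,
    # "open_severe_defects" has not been measured yet.
}

unmet = check_release_criteria(criteria, measurements)
print(unmet)
```

Treating an unmeasured attribute as unmet is a design choice in this sketch: it mirrors the point that the completeness of the "definition of done" matters as much as meeting it.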

4.2 Requirements Management

Measuring product quality requires that the organization be capable of good requirements management. Requirements management comprises the set of processes that an organization uses to gather, assess, evaluate, quantify, document, prioritize, communicate, and, ultimately, deliver the requirements of its stakeholders. Requirements might be those illustrated in the graphic below.

Figure 2. Requirement Type Options

Key goals of requirements management are to ensure that requirements are unambiguously stated, are universally agreed, and are understood in terms of their value to stakeholders.

Because requirements are fundamental to the delivery of a quality product, quality models (see Quality Models) are generally used as an aid to ensure that requirements statements are complete and comprehensive.

4.3 Quality Capability

Quality capability is the capacity and ability of the organization's processes, procedures, methods, controls, and governance to deliver quality products.

In order to be confident about satisfying stakeholder requirements, the organization must also measure its quality capability, and, therefore, measure the quality of its processes, procedures, methods, controls, standards, and so on. The established way to create quality capability is by first introducing standard practices so that all participants in the process know what to expect, and to ensure that these practices are well understood and comprehensively adopted.

An organization's quality capability often reflects its maturity. In a mature EIT organization, its members have a well-understood concept of their common processes and share a common vocabulary for talking about those processes. Various organizations have published versions of EIT maturity models, but there is a lack of general adoption of any model. In addition to making it easier for people to get their jobs done, standard practices also make it possible to methodically collect and implement lessons learned at specific points in a development project or at regular times during EIT production operations. This reflects the principle of continuous improvement.

4.4 QMS and QC vs. QA

The fundamental approach to achieving quality products and services is to develop processes and mechanisms for quality control (QC) and for quality assurance (QA). These are normally expressed as an organization's quality management system (QMS), which is the collection of an organization's business processes, quality policies, and quality objectives directed toward meeting customer requirements.

Although the terms QC and QA are often used interchangeably, there is a very meaningful difference between them as shown below.

A common misconception is that QA and testing are equivalent. In fact, testing is one of the tools of QC, because the aim of testing is the detection and quantification of errors, which then results in defect reports. By assessing information from defect reports (as part of QA), EIT can determine whether it is meeting its quality objectives.
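
The relationship between the two activities can be sketched in a few lines of Python: testing (QC) produces defect reports, and a separate assessment step (QA) compares the aggregated reports with a quality objective. The report fields, severity labels, and allowed counts below are hypothetical and serve only to illustrate the division of labor.

```python
from collections import Counter


def summarize_defects(defect_reports):
    """Aggregate QC defect reports into a count per severity."""
    return Counter(report["severity"] for report in defect_reports)


def meets_objective(summary, max_allowed):
    """QA-style check: is every severity count within its allowed maximum?"""
    return all(summary.get(severity, 0) <= limit
               for severity, limit in max_allowed.items())


# Hypothetical defect reports produced by testing.
defect_reports = [
    {"id": 1, "severity": "minor"},
    {"id": 2, "severity": "major"},
    {"id": 3, "severity": "minor"},
]
# Hypothetical quality objective: no major defects, at most five minor ones.
objective = {"major": 0, "minor": 5}

summary = summarize_defects(defect_reports)
objective_met = meets_objective(summary, objective)
print(dict(summary), objective_met)
```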

4.5 Technical Debt and the Cost of Rework

For most organizations, software production is based on a balance, or set of compromises, between the requirements, the budget provided, and the time available. Whenever a decision is made to limit (or omit or defer) engineering work due to budgetary or time constraints, the resulting loss in quality (quantified as the amount of rework that would need to be done later to rectify the compromise) is commonly referred to as technical debt. [6] In essence, technical debt accrues when a "pay now or pay later" situation results in a "pay later" decision. Typical causes of technical debt include:

- The tendency to decide consciously not to fix known minor bugs prior to delivery because of time (or other) pressures

- A lack of knowledge about how much, and what kinds of, testing should be done prior to releasing a system into operational status, resulting in errors not being found until the system goes into production

Technical debt is referred to as a debt because the applied design or construction approach (often called a "kludge"), while expedient in the short term, creates a technical context in which the same work will likely have to be redone in the future—incurring a future cost (or debt). The total cost of such rework is typically greater than the cost of doing the work now (including increased cost over time), and escalates as technical debt accumulates.

The cost of rework is usually seen in EIT as fire-fighting. Hidden bugs from poorly designed or built code appear suddenly, usually at the worst possible time, precipitating an urgent fix. People must drop everything to get things running normally again.

The total cost in such situations includes both the work done to fix the bug and the work that had to be dropped so that the bug could be fixed. Technical debt, however, refers only to how much it would cost to fix the quality issue at hand; it doesn't include the lost opportunity inherent in new work that had to be put off.

While estimates vary, some studies suggest that maintenance activities—most of which are rework of some kind—can account for up to 80 percent of an EIT budget. An inevitable result of this kind of cost burden is that fewer resources are left available to fulfill requests to create new capabilities in support of new business opportunities.

Several studies have attempted to quantify the economic impact of technical debt. Based on "the analysis of 1400 applications containing 550 million lines of code submitted by 160 organizations, CRL estimates that the technical debt of an average-sized application of 300,000 lines of code (LOC) is $1,083,000. This represents an average technical debt per LOC of $3.61." [7] CRL (CAST Research Labs) considered only those problems that are "highly likely to cause severe business disruption," not all problems. According to Gartner research (as reported by Deloitte University Press, http://dupress.com/articles/2014-tech-trends-technical-debt-reversal/), "… global EIT debt [in 2014] is estimated to stand at $500 billion, with the potential to rise to $1 trillion in 2015."

Figure 4. Application Analysis
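
The per-line figure quoted from CRL above is simply the total estimate divided by the application size. The short Python sketch below reproduces that arithmetic and then applies the resulting rate to a hypothetical 120,000-line application. Scaling the rate linearly to another application is an assumption made here for illustration only; the source does not claim that debt scales this way, and the function names are likewise hypothetical.

```python
def debt_per_loc(total_debt_usd, lines_of_code):
    """Average technical debt per line of code, in US dollars."""
    if lines_of_code <= 0:
        raise ValueError("lines_of_code must be positive")
    return total_debt_usd / lines_of_code


def estimate_debt(lines_of_code, rate_per_loc):
    """Rough estimate, assuming debt scales linearly with size."""
    return lines_of_code * rate_per_loc


# Figures quoted from the CRL study.
CRL_TOTAL_DEBT_USD = 1_083_000
CRL_APP_SIZE_LOC = 300_000

rate = debt_per_loc(CRL_TOTAL_DEBT_USD, CRL_APP_SIZE_LOC)
print(f"${rate:.2f} per LOC")  # $3.61 per LOC

# Hypothetical application size, for illustration only.
estimate = estimate_debt(120_000, rate)
print(f"${estimate:,.0f}")  # $433,200
```

Note that this covers only the cost of fixing the problems themselves; as described earlier, the opportunity cost of work that had to be dropped is not part of technical debt.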

5 Product and Service Requirements